Researchers have developed a technique that increases VR frame rates by synthesizing the second eye’s view instead of rendering it.

The paper is titled “You Only Render Once: Enhancing Energy and Computation Efficiency of Mobile Virtual Reality”. Its six authors are two researchers from the University of California San Diego, two from the University of Colorado Denver, one from the University of Nebraska–Lincoln, and one from Guangdong University of Technology.

Sample scenes running on a smartphone.

Stereoscopic rendering, the need to render a separate view for each eye, is one of the primary reasons that VR games are more demanding to run than flatscreen games, which leads to their relatively lower graphical fidelity. This makes VR less appealing to many mainstream gamers.

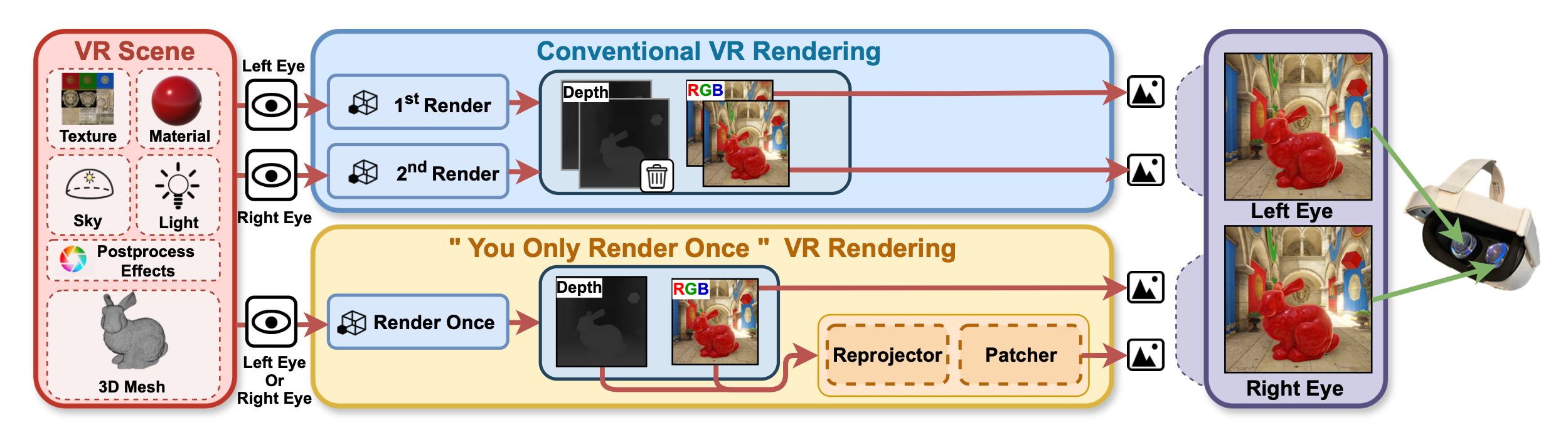

While standalone VR headsets like Quests can optimize the CPU draw calls of stereo rendering through a technique called Single Pass Stereo, the GPU still has to render both eyes, including the expensive per-pixel shading and memory overhead.

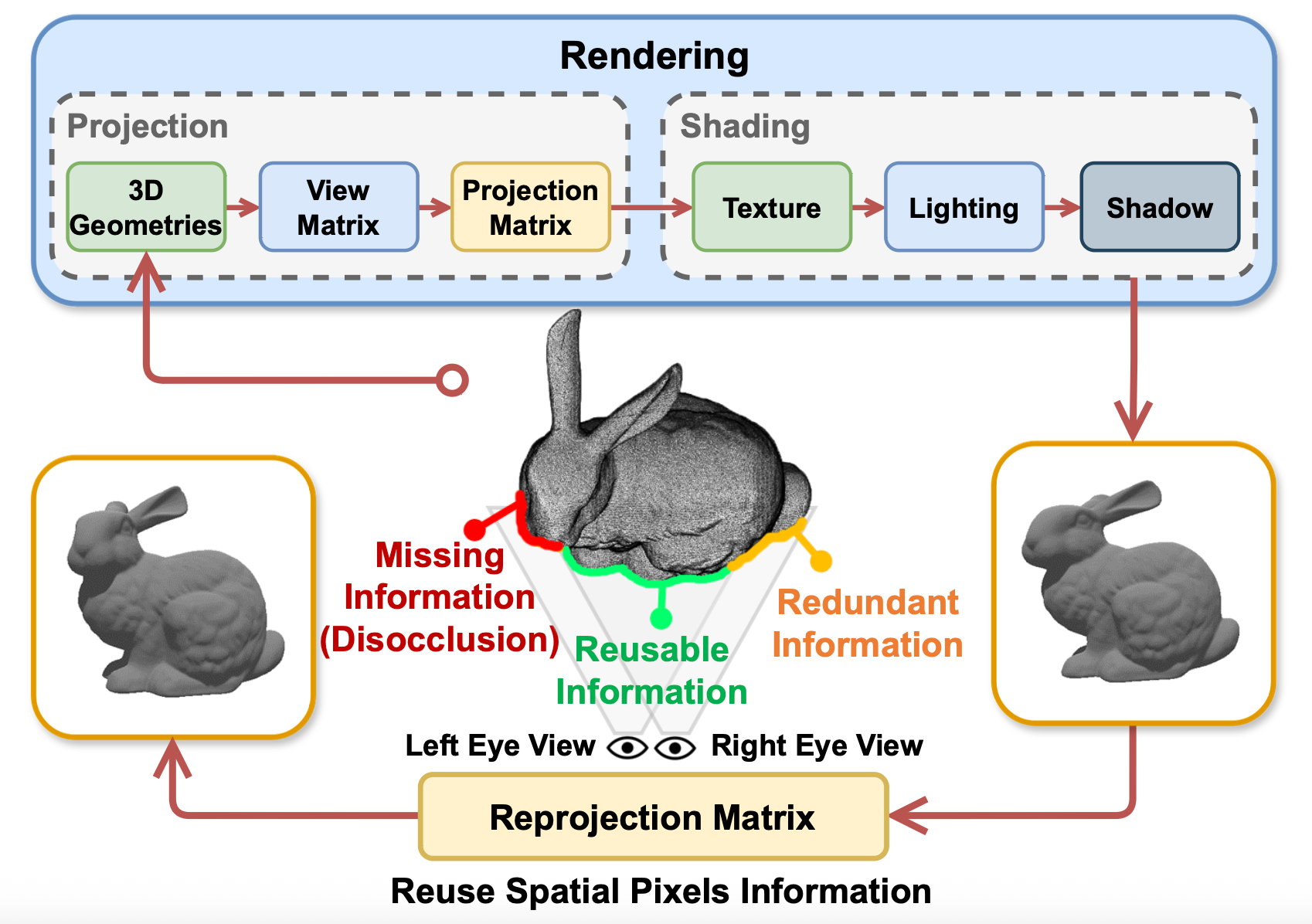

You Only Render Once (YORO) is a fascinating new approach. It starts by rendering one eye, as usual. But instead of rendering the other eye’s view at all, it efficiently synthesizes it through two stages: Reprojection and Patching.

To be clear, YORO is not an AI system. There is no neural network involved here, and thus there won’t be any AI hallucinations.

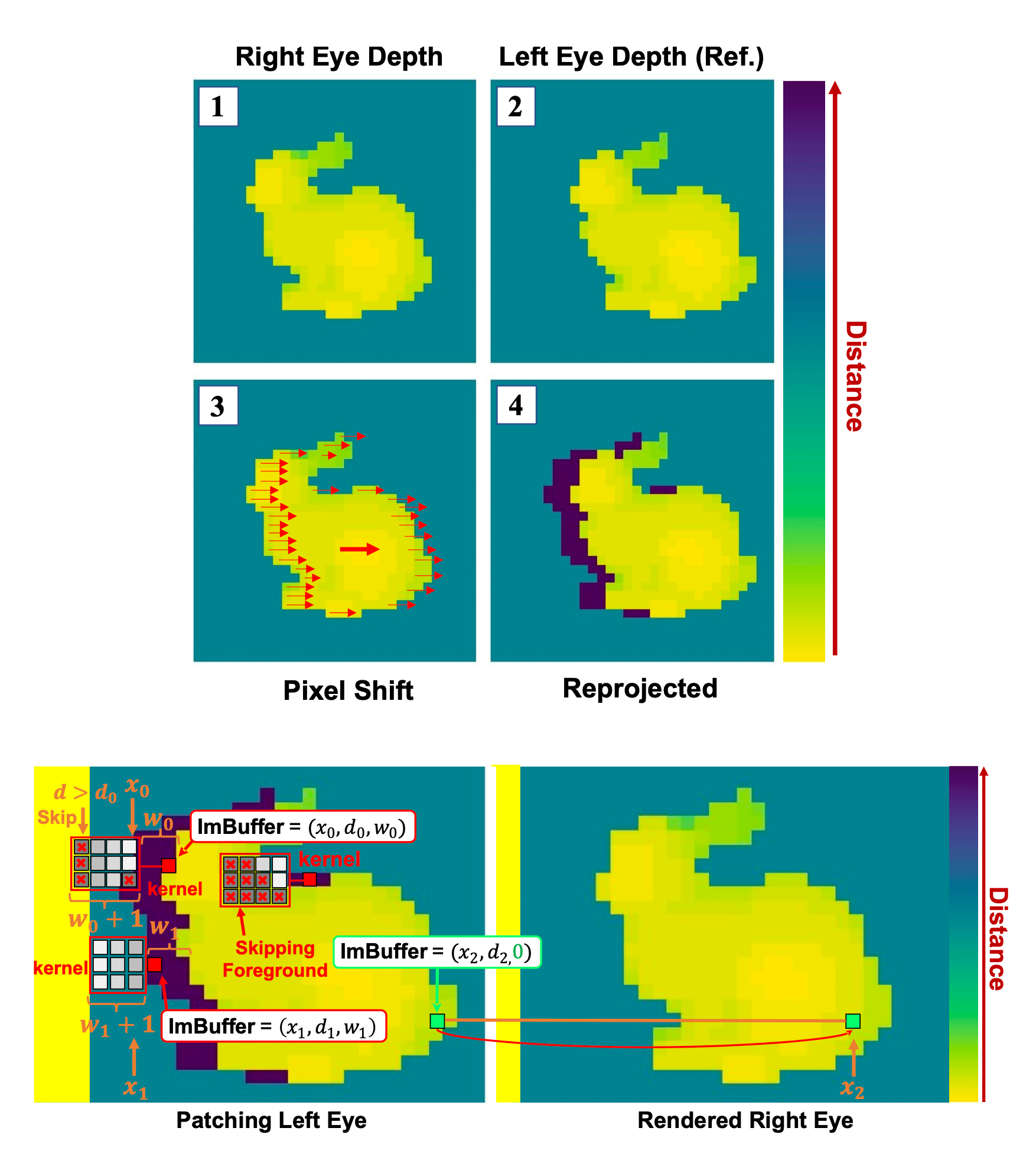

The Reprojection stage uses a compute shader to shift every pixel from the rendered eye into what would be the other eye’s screen space. It then marks which pixels are occluded, i.e. not visible from the rendered eye.
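To make the idea concrete, here is a minimal CPU sketch of reprojection on a single scanline. It is not the paper's compute shader: the function name `reproject_scanline`, the pinhole disparity model (`focal_px * baseline_m / depth`), and the assumption that the left eye is the rendered one are all illustrative choices, not details taken from the source.

```python
def reproject_scanline(colors, depths, focal_px, baseline_m):
    """Forward-warp one left-eye scanline into right-eye screen space.

    Assumes a simple pinhole stereo model (an assumption, not the paper's
    exact math): horizontal disparity in pixels = focal_px * baseline_m / depth.
    """
    width = len(colors)
    out_colors = [None] * width            # None means "nothing landed here"
    out_depths = [float("inf")] * width

    for x in range(width):
        z = depths[x]
        disparity = focal_px * baseline_m / z
        tx = int(round(x - disparity))     # nearer pixels shift further left
        # Depth test: if two source pixels land on one target, keep the nearer.
        if 0 <= tx < width and z < out_depths[tx]:
            out_colors[tx] = colors[x]
            out_depths[tx] = z

    # Pixels that received no source pixel were hidden from the rendered eye.
    holes = [c is None for c in out_colors]
    return out_colors, out_depths, holes


# A near "fg" object in front of a far "bg" wall.
colors = ["bg"] * 4 + ["fg"] * 2 + ["bg"] * 4
depths = [10.0] * 4 + [1.0] * 2 + [10.0] * 4
warped, warped_depths, holes = reproject_scanline(colors, depths, 20.0, 0.1)
```

In this toy scene the foreground object shifts two pixels to the left, and the two pixels it vacates are flagged as holes, because the rendered eye never saw the background behind them.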

That’s where the Patching stage comes in. It’s a lightweight image shader that applies a depth-aware in-paint filter to the occluded pixels marked by the Reprojection step, blurring background pixels into them.
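As a rough illustration of depth-aware patching, the sketch below fills each marked hole on a scanline from whichever nearest valid neighbor is farther from the camera, so background rather than foreground bleeds into the gap. The function name `patch_scanline` is hypothetical, and copying a single neighbor is a simplification: the filter described above blurs background pixels rather than copying one.

```python
def patch_scanline(colors, depths, holes):
    """Fill hole pixels from the background side (simplified in-paint).

    For each hole, look at the nearest non-hole pixel to the left and to the
    right, and copy the one with the greater depth (the background).
    """
    width = len(colors)
    patched = list(colors)

    for x in range(width):
        if not holes[x]:
            continue
        left = next((i for i in range(x - 1, -1, -1) if not holes[i]), None)
        right = next((i for i in range(x + 1, width) if not holes[i]), None)
        candidates = [i for i in (left, right) if i is not None]
        if not candidates:
            continue                       # whole scanline is a hole
        source = max(candidates, key=lambda i: depths[i])
        patched[x] = colors[source]

    return patched


# A reprojected scanline with a two-pixel hole next to a near object.
colors = ["bg", "bg", "fg", "fg", None, None, "bg", "bg", "bg", "bg"]
depths = [10.0, 10.0, 1.0, 1.0, float("inf"), float("inf"),
          10.0, 10.0, 10.0, 10.0]
holes = [c is None for c in colors]
patched = patch_scanline(colors, depths, holes)
```

Choosing the farther neighbor matters: the hole sits directly beside the foreground object, and filling from that side would smear the object into a region where only background should be visible.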

The researchers claim that YORO decreases GPU rendering overhead by over 50%, and that the synthetic eye view is “visually lossless” in most cases.

In a real-world test on a Quest 2 running Unity’s VR Beginner: The Escape Room sample, implementing YORO increased the frame rate by 32%, from 62FPS to 82FPS.
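For a sense of scale, the reported frame rates can be converted into per-frame time. This is plain arithmetic on the two figures quoted above; the variable names are only illustrative.

```python
def frame_time_ms(fps):
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps


fps_before, fps_after = 62, 82

# Relative frame rate gain, as a percentage.
speedup_pct = (fps_after / fps_before - 1) * 100

# Time saved on each frame.
saved_ms = frame_time_ms(fps_before) - frame_time_ms(fps_after)

print(f"{speedup_pct:.1f}% faster, {saved_ms:.2f} ms saved per frame")
```

In other words, each frame goes from roughly 16.1 ms to roughly 12.2 ms.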

The exception to this “visually lossless” synthesis is in the extreme near-field, meaning virtual objects that are very close to your eyes. Stereo disparity naturally increases the closer an object is to your eyes, so at some point the YORO approach just breaks down.

In this case, the researchers suggest simply falling back to regular stereo rendering. This can even be done for specific objects, ones very close to your eyes, rather than the whole scene.
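The per-object fallback could be sketched as follows. This assumes the same simple pinhole model as before (disparity in pixels = focal length in pixels × eye separation ÷ depth); the threshold `max_disparity_px`, the function names, and the example values are hypothetical and not taken from the paper.

```python
def disparity_px(depth_m, focal_px, baseline_m):
    """Stereo disparity in pixels; grows as the object gets closer."""
    return focal_px * baseline_m / depth_m


def split_by_disparity(object_depths, focal_px, baseline_m, max_disparity_px):
    """Decide per object whether to synthesize or render the second eye.

    object_depths maps an object name to its distance from the eyes in
    meters. Objects whose disparity exceeds the (assumed) threshold fall
    back to regular stereo rendering.
    """
    synthesize, render_stereo = [], []
    for name, depth_m in object_depths.items():
        if disparity_px(depth_m, focal_px, baseline_m) > max_disparity_px:
            render_stereo.append(name)
        else:
            synthesize.append(name)
    return synthesize, render_stereo


scene = {"wall": 4.0, "table": 1.0, "held_tool": 0.25}
synthesize, render_stereo = split_by_disparity(
    scene, focal_px=800.0, baseline_m=0.064, max_disparity_px=100.0
)
```

With these made-up numbers, the wall and table have disparities of 12.8 and 51.2 pixels and are synthesized, while the tool held 25 cm from the eyes reaches 204.8 pixels and is rendered for both eyes.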

A more significant limitation of YORO is that it does not support transparent geometry. However, the researchers note that transparency requires a second rendering pass on mobile GPUs anyway, and so is rarely used, and they suggest that YORO could be inserted before the transparent rendering pass.

The source code of a Unity implementation of YORO is available on GitHub, under the GNU General Public License (GPL). As such, it’s theoretically already possible for VR developers to implement this in their titles. But will platform-holders like Meta, ByteDance, and Apple adopt a technique similar to YORO at the SDK or system level? We’ll keep a close eye on these companies in the coming months and years for any signs.
