Meta has revealed the first technical details of its new Horizon Engine.

Announced at Connect 2025 two weeks ago, Meta Horizon Engine is a new engine that powers both Horizon Worlds and Quest’s new Immersive Home, replacing Unity in each case.

The new Immersive Home is part of Horizon OS v81, which is currently available only on the Public Test Channel (PTC). In Horizon Worlds, the engine is used in worlds built with the upcoming Horizon Studio, which right now means only a few first-party worlds from Meta.

At Connect, Mark Zuckerberg said that Meta has spent “the last couple of years” building the new engine “from scratch”, and claimed that it brings 4x faster world loading and support for 100+ users in the same instance.

“This engine is fully optimized for bringing the metaverse to life. It is much faster performance and to load things, much better graphics, much easier to create with”, Zuckerberg said. But there wasn’t much in the way of specific technical details.

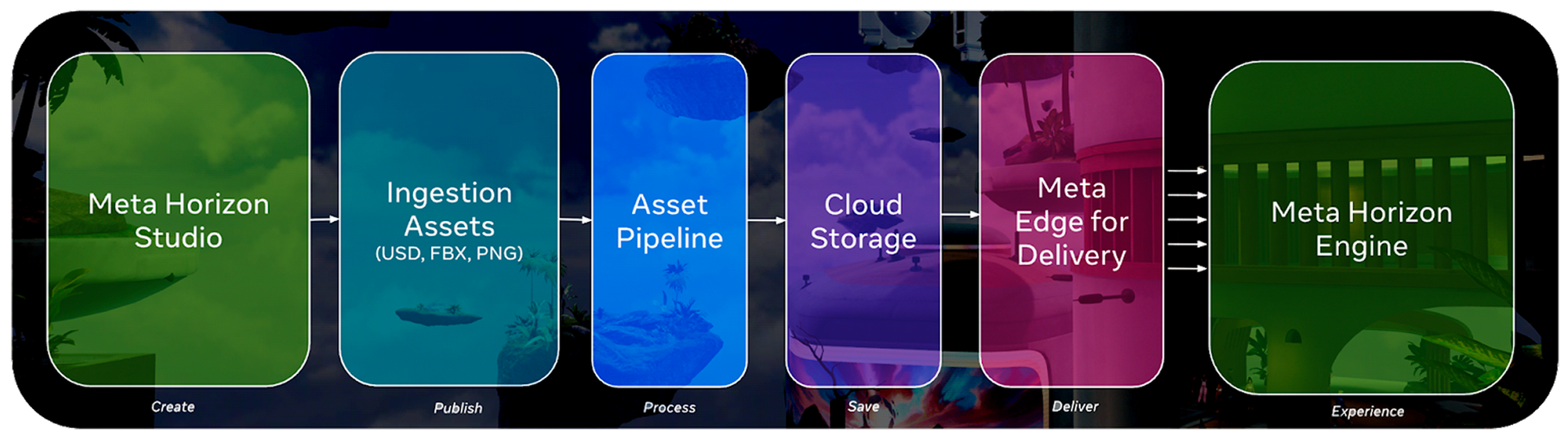

Now, Meta has published a blog post describing some details of Horizon Engine.

Horizon Engine “automatically scales from high-end cloud rendering to running on mobile phones”, Meta says, and can support “crowds of live avatars in a single shared space, expansive environments that can be streamed as sub-levels”, with “automatic management of object quality through automatically generated LODs”.

Here’s what Meta says about the core systems of Horizon Engine:

• Assets: A robust, data-driven, and creator-controlled asset pipeline that supports modern local workflows with standard tools and familiar middleware like PopcornFX for effects, FMOD for sound, Noesis for UI, and PhysX for physics.

• Audio: A spatialized audio system that stitches together experience sound, immersive media formats, and hybrid-mixed VoIP into a single immersive experience.

• Avatar: First-class integration of Meta Avatars, providing consistent embodiment and interaction behavior across the platform, and networked cross-instance crowd systems.

• Networking: A secure and scalable actor-based network topology that allows for low latency player-predicted interactions with server validation and creator-defined networked components.

• Rendering: A mobile and VR-first forward renderer, with a physically based shading model, built-in light baker, probe-defined lighting for dynamic objects, and a creator-defined material framework through a powerful, extensible, and stackable surface shader system.

• Resource Management: A resource manager, streaming system, and multi-threaded task scheduling framework to maintain the user experience by balancing quality and cost within the variable performance envelope of Quest and other platforms.

• Scripting: An extensible TypeScript authoring environment to unify logic and control flow in worlds, with creator-defined components and clear entity lifecycles.

• Simulation: A data-oriented, ECS-based simulation system capable of efficiently simulating millions of networked entities.
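Meta doesn’t say how its ECS (entity component system) is built. For readers unfamiliar with the pattern, here is a minimal sketch of a data-oriented ECS in TypeScript, the language Horizon Engine exposes for scripting. This is not Horizon Engine’s API: every name below (`World`, `movementSystem`, the component flags) is hypothetical. Entities are plain integer IDs, each component field lives in its own contiguous typed array, and a “system” is a loop over every entity whose component bitmask matches.

```typescript
// Hypothetical sketch of a data-oriented ECS; NOT Meta's Horizon Engine API.

// Component bit flags: each entity's mask records which components it has.
const POSITION = 1 << 0;
const VELOCITY = 1 << 1;

class World {
  private nextId = 0;
  readonly mask: Uint32Array;
  // Struct-of-arrays storage: one contiguous typed array per component field.
  readonly posX: Float32Array;
  readonly posY: Float32Array;
  readonly velX: Float32Array;
  readonly velY: Float32Array;

  constructor(readonly capacity: number) {
    this.mask = new Uint32Array(capacity);
    this.posX = new Float32Array(capacity);
    this.posY = new Float32Array(capacity);
    this.velX = new Float32Array(capacity);
    this.velY = new Float32Array(capacity);
  }

  // An entity is just an index into the component arrays.
  createEntity(): number {
    if (this.nextId >= this.capacity) throw new Error("world is full");
    return this.nextId++;
  }

  addPosition(e: number, x: number, y: number): void {
    this.posX[e] = x;
    this.posY[e] = y;
    this.mask[e] |= POSITION;
  }

  addVelocity(e: number, vx: number, vy: number): void {
    this.velX[e] = vx;
    this.velY[e] = vy;
    this.mask[e] |= VELOCITY;
  }

  get entityCount(): number {
    return this.nextId;
  }
}

// A system is a plain function that processes every entity whose mask
// contains all of the components it needs.
function movementSystem(world: World, dt: number): void {
  const required = POSITION | VELOCITY;
  for (let e = 0; e < world.entityCount; e++) {
    if ((world.mask[e] & required) !== required) continue;
    world.posX[e] += world.velX[e] * dt;
    world.posY[e] += world.velY[e] * dt;
  }
}

// Usage: one moving entity and one static prop.
const world = new World(1024);
const mover = world.createEntity();
world.addPosition(mover, 0, 0);
world.addVelocity(mover, 2, 1);
const prop = world.createEntity();
world.addPosition(prop, 5, 5); // no velocity component, so the system skips it

movementSystem(world, 0.5);
console.log(world.posX[mover], world.posY[mover]); // 1 0.5
console.log(world.posX[prop], world.posY[prop]); // 5 5
```

Keeping each field in a contiguous array, rather than allocating one object per entity, lets a system stream through memory in a tight loop. That is the usual argument for why ECS designs scale to very large entity counts, though how Meta applies it to networked entities isn’t described.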

It’s notable that Meta describes the engine as “running on mobile phones”. Horizon Worlds on smartphones is currently cloud streamed (poorly, in my experience), meaning each mobile session has a non-trivial cost to Meta, and requires a strong and consistent internet connection from the user. If the company moves to native rendering on phones, it could result in a more responsive and reliable experience, bolstering its ambitions of competing with the likes of Fortnite and Roblox.
