For hundreds of years, Europeans agreed that the presence of a cuckoo egg was an awesome honor to a nesting fowl, because it granted a chance to exhibit Christian hospitality. The religious fowl enthusiastically fed her holy visitor, much more so than she would her personal (evicted) chicks (Davies, 2015). In 1859, Charles Darwin’s research of one other occasional brood parasite, finches, known as into query any rosy, cooperative view of fowl conduct (Darwin, 1859). With out contemplating the evolution of the cuckoo’s function, it could have been tough to acknowledge the nesting fowl not as a gracious host to the cuckoo chick, however as an unlucky dupe. The historic course of is crucial to understanding its organic penalties; as evolutionary biologist Theodosius Dobzhansky put it, Nothing in Biology Makes Sense Except in the Light of Evolution.

Definitely Stochastic Gradient Descent is just not actually organic evolution, however post-hoc evaluation in machine studying has a lot in common with scientific approaches in biology, and likewise usually requires an understanding of the origin of mannequin conduct. Due to this fact, the next holds whether or not parasitic brooding conduct or on the interior representations of a neural community: if we don’t contemplate how a system develops, it’s tough to differentiate a satisfying story from a helpful evaluation. On this piece, I’ll talk about the tendency in the direction of “interpretability creationism” – interpretability strategies that solely have a look at the ultimate state of the mannequin and ignore its evolution over the course of coaching – and suggest a give attention to the coaching course of to complement interpretability analysis.

Simply-So Tales

People are causal thinkers, so even once we don’t perceive the method that results in a trait, we have a tendency to inform causal tales. In pre-evolutionary folklore, animal traits have lengthy been defined via Lamarckian just-so stories like “How the Leopard Obtained His Spots”, which suggest the aim or reason for a trait with out the scientific understanding of evolution. We’ve many pleasing just-so tales in NLP as effectively, when researchers suggest an interpretable clarification of some noticed conduct regardless of its growth. For instance, a lot has been manufactured from interpretable artifacts reminiscent of syntactic attention distributions or selective neurons, however how can we all know if such a sample of conduct is definitely utilized by the mannequin? Causal modeling may help, however interventions to check the affect of specific options and patterns could goal solely specific varieties of conduct explicitly. In observe, it might be attainable solely to carry out sure varieties of slight interventions on particular models inside a illustration, failing to mirror interactions between options correctly.

Moreover, in staging these interventions, we create distribution shifts {that a} mannequin will not be sturdy to, no matter whether or not that conduct is a part of a core technique. Vital distribution shifts may cause erratic conduct, so why shouldn’t they trigger spurious interpretable artifacts? In observe, we discover no shortage of incidental observations construed as essential.

Fortuitously, the research of evolution has offered various methods to interpret the artifacts produced by a mannequin. Just like the human tailbone, they could have misplaced their unique operate and change into vestigial over the course of coaching. They might have dependencies, with some options and buildings counting on the presence of different properties earlier in coaching, just like the requirement for mild sensing earlier than a posh eye can develop. Different properties may compete with one another, as when an animal with a robust sense of scent depends much less on imaginative and prescient, subsequently shedding decision and acuity. Some artifacts may symbolize uncomfortable side effects of coaching, like how junk DNA constitutes a majority of our genetic code with out influencing our phenotypes.

We’ve various theories for the way unused artifacts may emerge whereas coaching fashions. For instance, the Information Bottleneck Hypothesis predicts how inputs could also be memorized early in coaching, earlier than representations are compressed to solely retain details about the output. These early memorized interpolations could not in the end be helpful when generalizing to unseen information, however they’re important with the intention to finally be taught to particularly symbolize the output. We can also contemplate the potential of vestigial options, as a result of early coaching conduct is so distinct from late coaching: earlier models are more simplistic. Within the case of language fashions, they behave similarly to ngram models early on and exhibit linguistic patterns later. Unwanted side effects of such a heterogeneous coaching course of might simply be mistaken for essential elements of a skilled mannequin.

The Evolutionary View

I could also be unimpressed by “interpretability creationist” explanations of static absolutely skilled fashions, however I’ve engaged in related evaluation myself. I’ve revealed papers on probing static representations, and the outcomes usually appear intuitive and explanatory. Nonetheless, the presence of a function on the finish of coaching is hardly informative concerning the inductive bias of a mannequin by itself! Take into account Lovering et al., who discovered that the convenience of extracting a function in the beginning of coaching, together with an evaluation of the finetuning information, has deeper implications for finetuned efficiency than we get by merely probing on the finish of coaching.

Allow us to contemplate an evidence often based mostly on analyzing static fashions: hierarchical conduct in language fashions. An instance of this method is the declare that words that are closely linked on a syntax tree have representations that are closer together, in comparison with phrases which are syntactically farther. How can we all know that the mannequin is behaving hierarchically by grouping phrases in line with syntactic proximity? Alternatively, syntactic neighbors could also be extra strongly linked as a consequence of a robust correlation between close by phrases as a result of they’ve increased joint frequency distributions. For instance, maybe constituents like “soccer match” are extra predictable as a result of frequency of their co-occurrence, in comparison with extra distant relations like that between “uncle” and “soccer” within the sentence, “My uncle drove me to a soccer match”.

The truth is, we might be extra assured that some language fashions are hierarchical, as a result of early fashions encode extra native data in LSTMs and Transformers, they usually be taught longer distance dependencies extra simply when these dependencies might be stacked onto short familiar constituents hierarchically.

An Instance

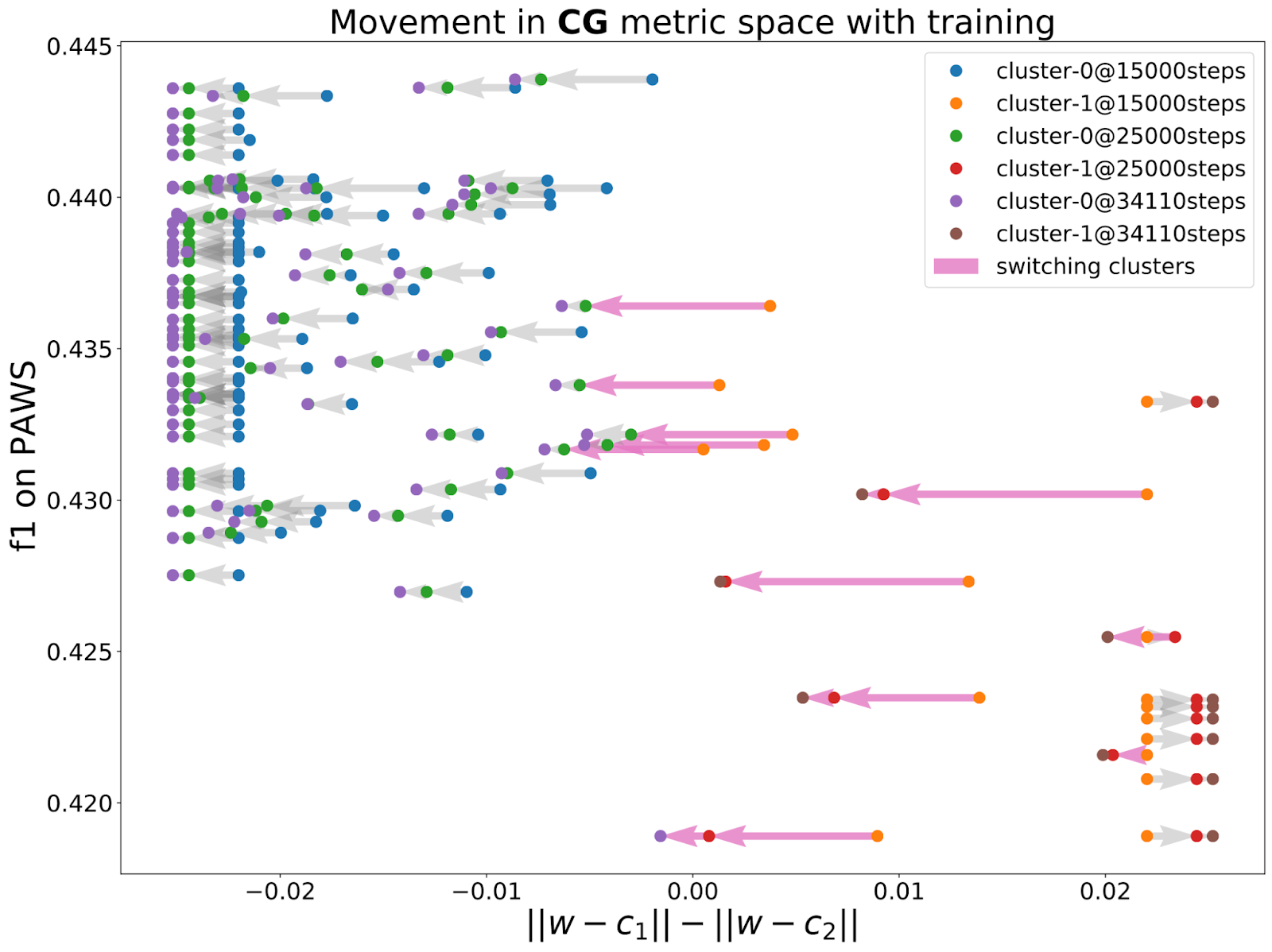

I just lately needed to handle the entice of interpretability creationism myself. My coauthors had discovered that, when coaching textual content classifiers repeatedly with totally different random seeds, models can occur in a number of distinct clusters. Additional, we might predict the generalization conduct of a mannequin based mostly on which different fashions it was related to on the loss floor. Now, we suspected that totally different finetuning runs discovered fashions with totally different generalization conduct as a result of their trajectories entered totally different basins on the loss floor.

However might we truly make this declare? What if one cluster truly corresponded to earlier levels of a mannequin? Finally these fashions would go away for the cluster with higher generalization, so our solely actual end result can be that some finetuning runs have been slower than others. We needed to reveal that coaching trajectories might truly change into trapped in a basin, offering an evidence for the variety of generalization conduct in skilled fashions. Certainly, once we checked out a number of checkpoints, we confirmed that fashions that have been very central to both cluster would change into much more strongly related to the remainder of their cluster over the course of coaching. Nonetheless, some fashions efficiently transition to a greater cluster. As a substitute of providing a just-so story based mostly on a static mannequin, we explored the evolution of noticed conduct to verify our speculation.

A Proposal

To be clear, not each query might be answered by solely observing the coaching course of. Causal claims require interventions! In biology, for instance, analysis about antibiotic resistance requires us to intentionally expose micro organism to antibiotics, moderately than ready and hoping to discover a pure experiment. Even the claims presently being made based mostly on observations of coaching dynamics could require experimental affirmation.

Moreover, not all claims require any remark of the coaching course of. Even to historic people, many organs had an apparent objective: eyes see, hearts pump blood, and brains are refrigerators. Likewise in NLP, simply by analyzing static fashions we are able to make easy claims: that specific neurons activate within the presence of specific properties, or that some varieties of data stay accessible inside a mannequin. Nonetheless, the coaching dimension can nonetheless make clear the which means of many observations made in a static mannequin.

My proposal is easy. Are you growing a way of interpretation or analyzing some property of a skilled mannequin? Don’t simply have a look at the ultimate checkpoint in coaching. Apply that evaluation to a number of intermediate checkpoints. If you’re finetuning a mannequin, examine a number of factors each early and late in coaching. If you’re analyzing a language mannequin, MultiBERTs, Pythia, and Mistral present intermediate checkpoints sampled from all through coaching on masked and autoregressive language fashions, respectively. Does the conduct that you simply’ve analyzed change over the course of coaching? Does your perception concerning the mannequin’s technique truly make sense after observing what occurs early in coaching? There’s little or no overhead to an experiment like this, and also you by no means know what you’ll discover!

Writer Bio

Naomi Saphra is a postdoctoral researcher at NYU with Kyunghyun Cho. Beforehand, she earned a PhD from the College of Edinburgh on Training Dynamics of Neural Language Models, labored at Google and Fb, and attended Johns Hopkins and Carnegie Mellon College. Outdoors of analysis, she play curler derby beneath the title Gaussian Retribution, does standup comedy, and shepherds disabled programmers into the world of code dictation.

Quotation:

For attribution in tutorial contexts or books, please cite this work as

Naomi Saphra, “Interpretability Creationism”, The Gradient, 2023.

BibTeX quotation:

@article{saphra2023interp,

writer = {Saphra, Naomi},

title = {Interpretability Creationism},

journal = {The Gradient},

12 months = {2023},

howpublished = {url{https://thegradient.pub/interpretability-creationism},

}

Source link

#Interpretability #Creationism

Unlock the potential of cutting-edge AI options with our complete choices. As a number one supplier within the AI panorama, we harness the facility of synthetic intelligence to revolutionize industries. From machine studying and information analytics to pure language processing and pc imaginative and prescient, our AI options are designed to boost effectivity and drive innovation. Discover the limitless prospects of AI-driven insights and automation that propel your enterprise ahead. With a dedication to staying on the forefront of the quickly evolving AI market, we ship tailor-made options that meet your particular wants. Be part of us on the forefront of technological development, and let AI redefine the best way you use and achieve a aggressive panorama. Embrace the long run with AI excellence, the place prospects are limitless, and competitors is surpassed.