On Monday, sheet music platform Soundslice said it developed a new feature after discovering that ChatGPT was incorrectly telling users the service could import ASCII tablature, a text-based guitar notation format the company had never supported. The incident may be the first case of a business building functionality in direct response to an AI model’s confabulation.

Typically, Soundslice digitizes sheet music from photos or PDFs and syncs the notation with audio or video recordings, allowing musicians to see the music scroll by as they hear it played. The platform also includes tools for slowing down playback and practicing difficult passages.

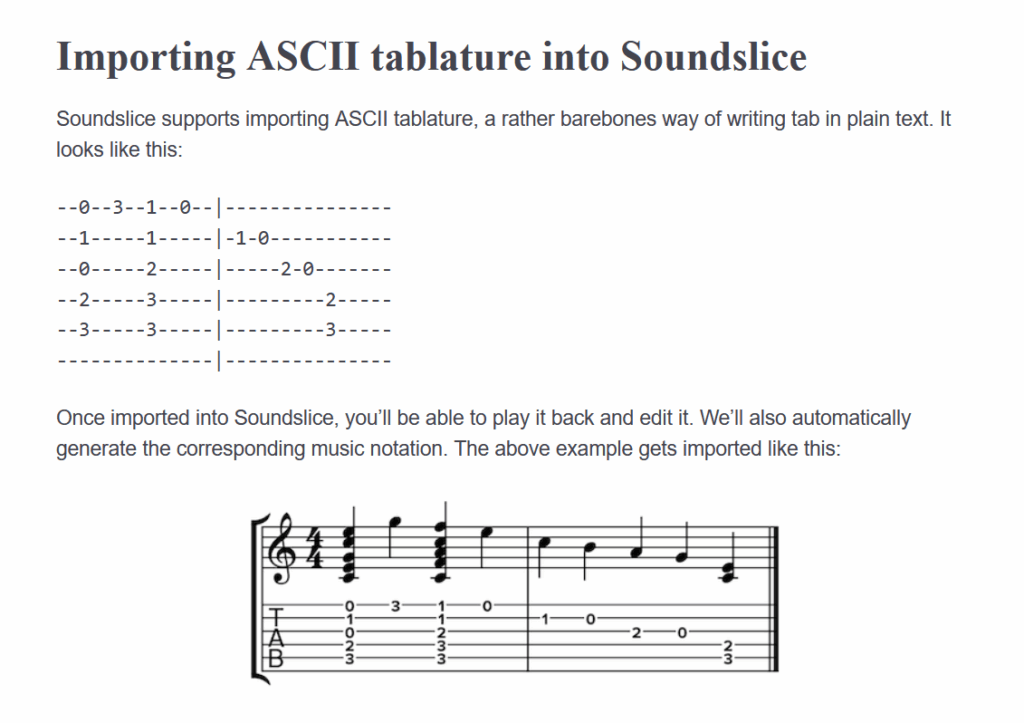

Adrian Holovaty, co-founder of Soundslice, wrote in a recent blog post that the feature’s development began as a complete mystery. A few months ago, Holovaty began noticing unusual activity in the company’s error logs. Instead of typical sheet music uploads, users were submitting screenshots of ChatGPT conversations containing ASCII tablature: simple text representations of guitar music that look like strings with numbers indicating fret positions.

“Our scanning system wasn’t intended to support this style of notation,” wrote Holovaty in the blog post. “Why, then, were we being bombarded with so many ASCII tab ChatGPT screenshots? I was mystified for weeks—until I messed around with ChatGPT myself.”

When Holovaty tested ChatGPT, he discovered the source of the confusion: The AI model was instructing users to create Soundslice accounts and use the platform to import ASCII tabs for audio playback—a feature that didn’t exist. “We’ve never supported ASCII tab; ChatGPT was outright lying to people,” Holovaty wrote. “And making us look bad in the process, setting false expectations about our service.”

When AI models like ChatGPT generate false information with apparent confidence, AI researchers call it a “hallucination” or “confabulation.” The problem has plagued AI models since ChatGPT’s public release in November 2022, when people began erroneously using the chatbot as a replacement for a search engine.
