Introduction

Imagine yourself a decade ago, dropped straight into the present-day shock of conversing naturally with an encyclopedic AI that crafts images, writes code, and debates philosophy. Won’t this technology almost certainly transform society, and hasn’t AI’s impact on us so far been a mixed bag? It’s no surprise, then, that so many conversations these days circle around an era-defining question: How do we ensure AI benefits humanity? These conversations often devolve into strident optimism or pessimism about AI. Our earnest aim is to walk a pragmatic middle path, though no doubt we won’t perfectly succeed.

While it’s fashionable to handwave towards “beneficial AI,” and many of us want to contribute towards its development, it’s not easy to pin down what beneficial AI concretely means in practice. This essay is our attempt to demystify beneficial AI by grounding it in the wellbeing of individuals and the health of society. In doing so, we hope to promote opportunities for AI research and products to benefit our flourishing. Along the way, we share the ways of thinking about AI’s coming impact that motivate our conclusions.

The Big Picture

By trade, our backgrounds are closer to AI than to the fields where human flourishing is most discussed, such as wellbeing economics, positive psychology, or philosophy. In our journey to find productive connections between those fields and the technical world of AI, we often found ourselves confused (what even is human flourishing, or wellbeing, anyway?). That confusion often left us stuck: perhaps there is nothing to be done, because the problem is too multifarious and diffuse. We imagine that others aiming to create prosocial technology might share our experience. Our hope here is to light a partial path through the confusion to a place where there is much interesting and useful work to be done. We start with some of our main conclusions and then dive into more detail in what follows.

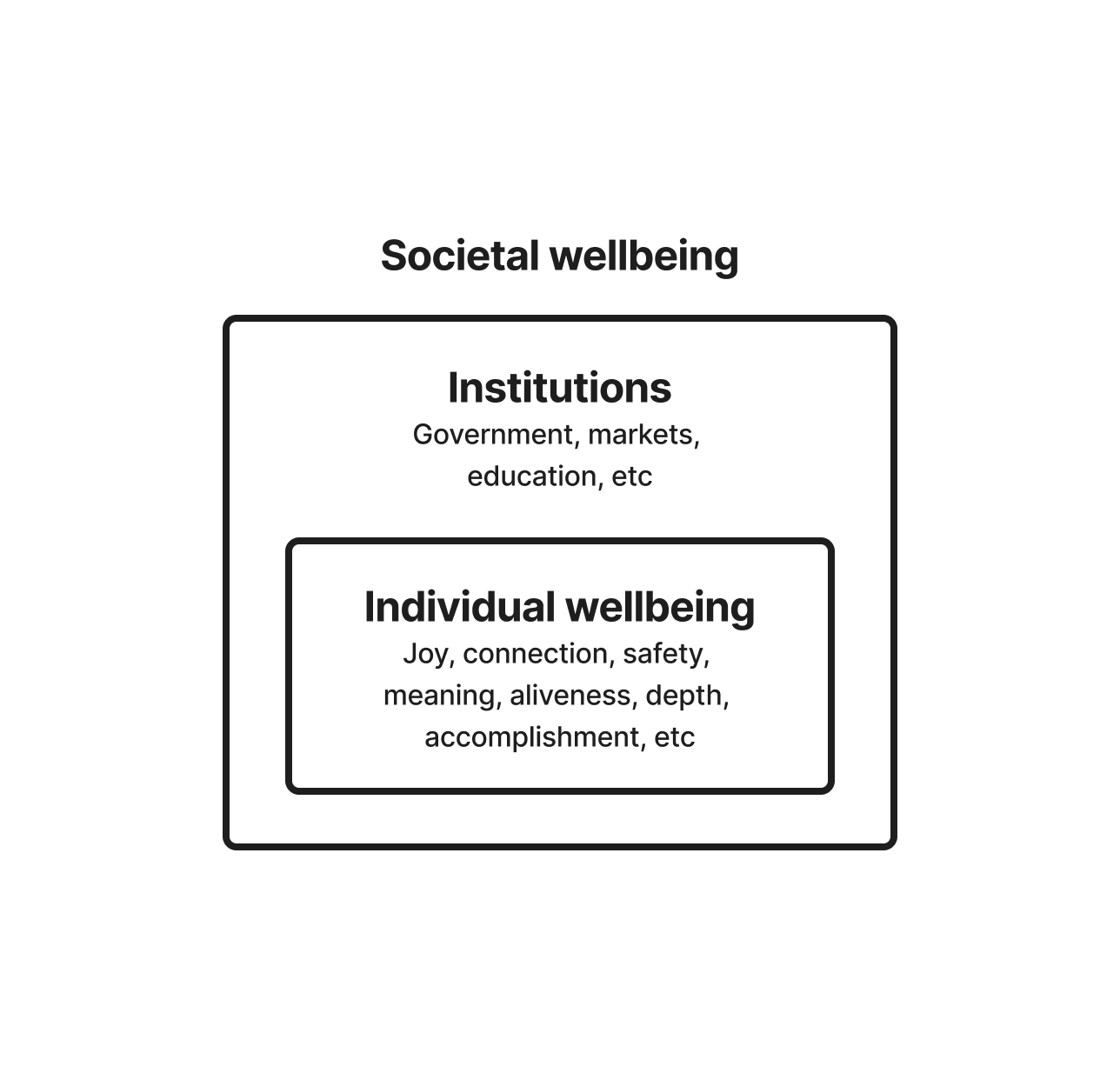

One conclusion we came to is that it’s okay that we can’t conclusively define human wellbeing. Philosophers, economists, psychotherapists, psychologists, and religious thinkers have debated it for many years, and there’s no consensus. At the same time, there’s agreement around many concrete factors that make our lives go well. These include supportive intimate relationships, meaningful and engaging work, a sense of growth and achievement, and positive emotional experiences. There’s also clear understanding that, beyond momentary wellbeing, we must consider how to secure and improve wellbeing across years and decades. That happens through what we might call societal infrastructure: important institutions such as education, government, the market, and academia.

One benefit of this wellbeing lens is that it wakes us to an almost-paradoxical fact: while the deep purpose behind nearly everything our species does is wellbeing, we’ve tragically lost sight of it. We’re not doing well by common measures of either individual wellbeing (suicide rate, loneliness, meaningful work) or societal wellbeing (trust in our institutions, a shared sense of reality, political divisiveness). Our impression is that AI is complicit in that decline. The central benefit of this wellbeing view, however, is the insight that no fundamental obstacle prevents us from synthesizing the science of wellbeing with machine learning to our collective benefit.

This leads to our second conclusion: we need plausible positive visions of a society with capable AI, grounded in wellbeing. Like earlier transformative technologies, AI will shock our societal infrastructure, dramatically altering the character of our daily lives whether we want it to or not. For example, Facebook launched only twenty years ago, yet social media’s shockwaves have already upended much in society. It has subverted news media and our informational commons, addicted us to likes, and displaced meaningful human connection with a hollow shell of it. We believe capable AI’s impact will exceed that of social media. As a result, it’s crucial that we try to explore, envision, and move towards the AI-infused worlds we’d flourish within. In such worlds, perhaps, AI revitalizes our institutions, empowers us to pursue what we find most meaningful, and helps us cultivate our relationships. This is no simple task. It requires imagination, groundedness, and technical plausibility to dance through the minefields illuminated by past critiques of technology. Yet now is the time to dream and build if we want to actively shape what’s to come.

This segues into our final conclusion: foundation models and the arc of their future deployment are critical. Even for those of us in the thick of the field, it’s hard to internalize how quickly models have improved, and how capable they might become in a few more years. Recall that GPT-2, barely functional by today’s standards, was released only in 2019. Future models may be much more capable than today’s and may competently engage with more of the world with greater autonomy. If so, we can expect their entanglement with our lives and society to ratchet skywards. So, at minimum, we’d like to enable these models to understand our wellbeing and how to support it. That could come through new algorithms, wellbeing-based evaluations of models, and wellbeing training data. Of course, we also want to realize human benefit in practice; the last section of this blog post highlights what we believe are strong leverage points towards that end.

The rest of this post describes in more detail (1) what we mean by AI that benefits our wellbeing, (2) the need for positive visions for AI grounded in wellbeing, and (3) concrete leverage points to aid in the development and deployment of AI in service of such positive visions. We’ve designed this essay so that the individual parts are mostly independent. If you’re most interested in concrete research directions, feel free to skip there.

Beneficial AI grounds out in human wellbeing

Discussion about AI for human benefit is often high-minded but not particularly actionable. It tends toward unarguable but content-free phrases like “We should make sure AI is in service of humanity.” To meaningfully implement such ideas in AI or policy, though, they need enough precision and clarity to be translated into code or law. So we set out to survey what science has discovered about the foundations of human benefit, as a step towards being able to measure and support it through AI.

Often, when we think about beneficial impact, we focus on abstract pillars like democracy, education, fairness, or the economy. However important they are, none of these is valuable intrinsically. We care about them because of how they affect our collective lived experience, over the short and long term. We care about increasing society’s GDP to the extent that it aligns with actual improvement of our lives and future. Treated as an end in itself, however, GDP becomes disconnected from what matters: improving human (and potentially all species’) experience.

In seeking the fields that most directly study the root of human flourishing, we found the scientific literature on wellbeing. The literature is vast and spans many disciplines, each with its own abstractions and theories. As you might expect, there’s no true consensus on what wellbeing actually is. Diving into the philosophy of flourishing, wellbeing economics, or psychological theories of human wellbeing, one encounters many interesting and compelling but seemingly incompatible ideas.

For example, hedonistic theories in philosophy claim that pleasure and the absence of suffering are the core of wellbeing. Desire-satisfaction theories instead claim that wellbeing is about the fulfillment of our desires, no matter how we feel emotionally. There’s a wealth of literature on measuring subjective wellbeing (broadly, how we experience and feel about our lives), and many different frameworks for which variables characterize flourishing. For example, Martin Seligman’s PERMA framework claims that wellbeing consists of positive emotions, engagement, relationships, meaning, and achievement. Some theories say the core of wellbeing is satisfying psychological needs, like the needs for autonomy, competence, and relatedness. Other theories claim that wellbeing comes from living by our values. In economics, frameworks rhyme with those in philosophy and psychology but diverge enough to complicate a precise bridge. For example, the wellbeing economics movement largely focuses on subjective wellbeing and explores many different proxies for it, like income, quality of relationships, and job stability.

Perhaps unsurprisingly, once the excitement of surveying so many interesting ideas began to fade, we remained fundamentally confused about what “the right theory” was. But we recognized that this has in fact always been the human situation when it comes to wellbeing. The lack of an incontrovertible theory of flourishing has not prevented humanity from flourishing in the past, and it need not stand as a fundamental obstacle for beneficial AI. In other words, our attempts to guide AI to support human flourishing must take this lack of certainty seriously, just as every sophisticated societal effort to support flourishing must.

In the end, we came to a simple, workable understanding, not far from the view of wellbeing economics: human benefit must ultimately ground out in the lived experience of humans. We want to live happy, meaningful, healthy, full lives, and it’s not so difficult to imagine ways AI might assist in that aim. Examples include low-cost but proficient AI coaches, intelligent journals that help us self-reflect, and apps that help us find friends or romantic partners or connect with loved ones. We can ground these efforts in imperfect but workable measures of wellbeing from the literature (e.g. PERMA). In doing so, we should treat two concerns as first-class. The map (wellbeing measurement) is not the territory (actual wellbeing). And humanity itself continues to explore and refine its vision of wellbeing.

More broadly, our wellbeing depends on a healthy society. We care not only about our own lives; we also want beautiful lives for our neighbors, community, country, and world, and for our children and their children as well. The infrastructure of society (institutions like government, art, science, the military, education, news, and markets) is what supports this broader, longer-term vision of wellbeing.

Each of these institutions has an important role to play in society, and we can also imagine ways that AI could support or improve them. For example, generative AI might:

- catalyze education through personal tutors that help us develop a richer worldview;
- help us better hold our politicians to account by sifting through what they’re actually up to;
- accelerate meaningful science by helping researchers make novel connections.

In short, beneficial AI would meaningfully support our quest for lives worth living, in both the immediate and the long-term sense.

So, from the lofty confusion of conflicting grand theories, we arrive at something that sounds more like common sense. Let’s not take this for granted, however. It cuts through the cruft of abstractions to firmly recenter what’s ultimately important: the psychological experience of humans. This view points us towards the components of wellbeing that are well supported scientifically and that could be made measurable and actionable through AI (tools already exist to measure many of these components). Further, wellbeing across the short and long term provides a common currency that bridges divergent approaches to beneficial AI. Those approaches include:

- mitigating societal harms like discrimination, as the AI ethics community works to do;
- trying to reinvigorate democracy through AI-driven deliberation;
- creating a world where humans live more meaningful lives;
- building low-cost emotional support and self-growth tools;
- reducing the likelihood of existential risks from AI;
- using AI to reinvigorate our institutions.

In every case, wellbeing is the ultimate ground.

Finally, focusing on wellbeing helps to highlight where we currently fall short. Current AI development is driven by our existing incentive systems: profit, research novelty, and engagement, with little explicit focus on what is fundamentally more important (human flourishing). We need to find tractable ways to shift incentives towards wellbeing-supportive models (something we’ll discuss later), and positive directions to move toward (discussed next).

We need positive visions for AI

Technology is a surprisingly powerful societal force. Nearly all new technologies bring only limited change, like an improved toothbrush, but sometimes they upend the world. Like the proverbial slowly boiling frog, we forget how quickly the internet and cell phones have overhauled our lived experience. They brought dating apps, podcasts, social networks, constant messaging, cross-continental video calls, massive online games, the rise of influencers, limitless on-demand entertainment, and so on. Our lives as a whole have shifted dramatically, for both better and worse: our relationships, our entertainment, how we work and collaborate, and how news and politics work.

AI is transformative, and its mixed bag of impacts is poised to reshape society in both mundane and profound ways. We might doubt it, but the same doubt was our naivety at the advent of social media and the cell phone. We don’t see it coming, and once it’s here we take it for granted. Generative AI is moving applications straight from science fiction into rapid adoption:

- AI romantic companions;
- automated writing and coding assistants;
- automatic generation of high-quality images, music, and videos;
- low-cost, personalized AI tutors;
- highly persuasive personalized ads;
- and so on.

In this way, transformative impact is happening now; it doesn’t require AI with superhuman intelligence. Consider the rise of LLM-based social media bots, ChatGPT becoming the fastest-adopted consumer app, and LLMs forcing fundamental changes to homework in schools. Much greater impact is yet to come as the technology (and the business around it) matures and as AI is integrated more pervasively throughout society.

Our institutions were, understandably, not designed with this latest wave of AI in mind, and it’s unclear whether many of them will adapt quickly enough to keep up with AI’s rapid deployment. For example, an important function of news is to keep a democracy’s citizens well informed, so that their votes are meaningful. But news these days spreads through AI-driven algorithms on social media. Those algorithms amplify emotional virality and confirmation bias at the expense of meaningful debate. And so the public square and the sense of a shared reality are being undercut, as AI degrades an important institution that was designed without foresight of this new technological development.

Thus, in practice, it may not be possible to play defense by simply “mitigating harms” from a technology. Often, a new technology demands that we creatively and gracefully apply our existing values to a radically new situation. For example, we don’t want AI to undermine the livelihood of artists. Yet AI can now easily and cheaply produce compelling art, or write symphonies and novels in the style of your favorite artist. What do we want our relationship to creativity to look like in such a world? There’s no easy answer. We need to debate, understand, and capture what we believe is the spirit of our institutions and systems in light of this new technology.

For example, what’s truly important about education? We can reduce the harms AI imposes on the current educational paradigm by banning the use of AI in students’ essays. We can also apply AI in service of existing metrics, for example to increase high school graduation rates. But the paradigm itself must adapt. The world that schooling currently prepares our children for is not the world they’ll graduate into, nor does schooling generally prepare us to flourish and find meaning in our lives. We must ask ourselves what we truly value in education that we want AI to enable. Perhaps it is teaching critical thinking, enabling agency, and creating a sense of social belonging and civic responsibility?

To anticipate critique: we agree there will be no global consensus on what education is for, or on the underlying essence of any particular institution. At root, this is because different communities and societies have distinct values and visions. But that’s okay. Let’s empower communities to fit AI systems to their local societal contexts; for example, algorithms like constitutional AI make it possible to write different constitutions that embody flourishing for different communities. This kind of cheap flexibility is an exciting affordance. It means we no longer have to sacrifice nuance and context-sensitivity for scalability and efficiency, a bitter pill technology often pushes us to swallow.

And while we’ve of course always wanted education to create critical thinkers, our past metrics (like standardized tests) have been so coarse that high scores are easily gamed without critical thinking. Generative AI enables new affordances here, too. A teacher can question a student Socratically to evaluate their independent thought. Similarly, advances in generative AI open the door to comparably qualitative and interactive measures, like personalized AI tutors that meaningfully gauge critical thinking.

We hope to toe a delicate line beyond broken dichotomies, whether between naive optimism and pessimism or between idealism and cynicism. Change is coming, and channeling it towards refined visions of what we want is a profound opportunity. We should not assume that technology will save us (or doom us) by default. Nor should we assume that we can wholly resist the transformation it brings, or that we are completely helpless against it. For example, we must temper naive optimism (“AI will save the world if only we deploy it everywhere!”). We can do so by integrating lessons from the long line of work that studies the social drivers and consequences of technology, often from a critical angle. But neither should cynical concerns paralyze us so much that we remain only critics on the sidelines.

So, what can we do?

The case so far is that we need positive visions for a society with capable AI, grounded in individual and societal wellbeing. But what concrete work can actually support this? We propose the following breakdown:

- Understanding where we want to go

- Measuring how AI impacts our wellbeing

- Training models that can support wellbeing

- Deploying models in service of wellbeing

The general idea is to support an ongoing, iterative process: exploring the positive directions we want to go, and deploying and adapting models in service of them.

We need to understand where we want to go in the age of AI

This point follows closely from the need to explore the positive futures we want with AI. What directions of work and research can help us clarify where it is possible to go, and worth going, in the age of AI?

For starters, it’s more important now than ever to have productive and grounded discussions about questions like these:

- What makes us human?
- How do we want to live?
- What do we want the future to feel like?
- What values are important to us?
- What do we want to retain as AI transformations sweep through society?

Rather than being centered on the machine learning community, this should be an interdisciplinary, international effort. It should span psychology, philosophy, political science, art, economics, sociology, and neuroscience (and many other fields!), and it should bridge diverse cultures both within and across nations.

Of course, it’s easy to call for such a dialogue. The real question is how such interdisciplinary discussions can be convened in a meaningful, grounded, and action-guiding way, rather than leading only to cross-field squabbles or agreeable but vacuous aspirations. One option is participatory design that pairs citizens with disciplinary experts to explore these questions, with machine learning experts mainly helping to ground technological plausibility. AI itself could also be of service. Research in AI-driven deliberative democracy and plurality may help involve more people in navigating these questions. So might research into meaning alignment, by helping us describe and aggregate what we find meaningful and worth preserving. It’s important here to look beyond both cynicism and idealism (an attitude suggestive of metamodern political philosophy). Yes, mapping exciting positive futures is not a cure-all. Powerful societal forces resist their realization, such as regulatory capture, institutional momentum, and the profit motive. And yet every societal movement has to start somewhere, and some really do succeed.

Beyond visions for big-picture questions about the future, much work is needed to understand where we want to go in narrower contexts. For example, how could we reimagine online dating with capable AI? The question might at first seem trivial, but healthy romantic partnership is an important individual and societal good. Almost certainly, we’ll look back on swipe-based apps as a misguided means of finding long-term partners. Many of our institutions, small and large, can be re-envisioned in this way, from tutoring to academic journals to local newspapers. AI will open up a much richer set of design possibilities. We can work to identify which of those possibilities are workable and best capture the desired essence of an institution’s role in our lives and society.

Finally, continued basic and applied research into the factors that contribute to and characterize human wellbeing and societal health remains extremely important, since these factors ultimately ground our visions. And as the next section explores, better measures of these factors can help us change incentives and work towards our desired futures.

We need to develop measures of how AI affects wellbeing

For better and worse, we often navigate by what we measure. We’ve seen this play out before. Measure GDP, and nations orient towards increasing it at great expense. Measure clicks and engagement, and we build platforms that are terrifyingly adept at keeping people hooked. A natural question follows: what prevents us from similarly measuring aspects of wellbeing to guide how we develop and deploy AI? And if we do develop wellbeing measures, can we avoid the pitfalls that derailed other well-intended measures, like GDP or engagement?

One central problem for measurement is that wellbeing is more complex and qualitative than GDP or engagement. Time-on-site is a very straightforward measure of engagement. In contrast, properties associated with wellbeing, like a felt sense of meaning or the quality of one’s relationships, are difficult to pin down quantitatively. This is especially true from the limited vantage point of how a user interacts with a particular app.

Wellbeing depends on the broader context of a person’s life in messy ways, which makes it harder to isolate how any small intervention affects it. As a result, wellbeing measures are more expensive and less standardized to apply, so they are used less and play a smaller role in guiding how we develop technology. However, foundation models are beginning to show an exciting ability to work with qualitative aspects of wellbeing. For example, present-day language models can (with caveats) infer emotions from user messages and detect conflict. They can also conduct qualitative interviews with users about a product’s impact on their experience.

So one promising, if difficult, direction of research is to explore how foundation models themselves can be used to measure facets of individual and societal wellbeing more reliably. Ideally, such measures would also help identify how AI products and services are affecting that wellbeing. The mechanisms of impact are twofold. First, companies may currently lack the means to measure wellbeing, even though, all else being equal, they want their products to help people. Second, the profit motive sometimes conflicts with encouraging wellbeing. Where it does, a product’s impact could be externally audited and published. That would help consumers and regulators hold the company to account, shifting corporate incentives towards societal good.

Another powerful way for wellbeing-related measures to have impact is as evaluation benchmarks for foundation models. In machine learning, evaluations are a strong lever for channeling research effort through competitive pressure. For example, model providers and academics continually develop new models that perform better and better on benchmarks like TruthfulQA. Once results are legible, they often spur innovation to improve upon them. We currently have very few benchmarks that focus on how AI affects our wellbeing, or on how well models can understand our emotions, make wise decisions, or respect our autonomy. We need to develop these benchmarks.

Finally, as mentioned briefly above, metrics can also create accountability and enable regulation. Recent efforts like the Stanford Foundation Model Transparency Index have created public accountability for AI labs. Initiatives like Responsible Scaling Policies are premised on evaluations of model capabilities, as are evaluations by government bodies such as the AI Safety Institutes in both the UK and the US. Could similar metrics and initiatives encourage accountability for AI’s impact on wellbeing?

To anticipate a natural concern: unanticipated side effects are nearly universal when we try to improve important qualities through quantitative measures. What if measuring wellbeing perversely undermines it as a second-order consequence? For example, if a wellbeing measure doesn’t include notions of autonomy, optimizing it might produce paternalistic AI systems that “make us happy” by reducing our agency. There are book-length treatments of the failures of high modernism and (from one of the authors of this essay!) of the tyranny of measures and objectives. There are also many academic papers on how optimization can pervert measures or undermine our autonomy.

The trick is to look beyond binaries. Yes, measures and evaluations have serious problems. Yet we can work with them wisely by taking past failures seriously and institutionalizing the understanding that all measures are imperfect. In practice, that means we want:

- a diversity of metrics (metric federalism) and a diversity of AI models rather than a monoculture;
- measures that are not direct optimization targets;
- ways to adjust measures responsively when we inevitably learn of their limitations.

This is an important concern, and we must take it seriously. Some research has begun to explore this topic, but more is needed. Still, in the spirit of pragmatic harm reduction, metrics are both technically and politically important for steering AI systems, so developing less flawed measures remains an important goal.

Let’s consider one important example of harm from measurement: the tendency of a single global measure to trample local context. Training data for models, and internet data in particular, is heavily biased. Without deliberate remedy, then, models display uneven ability to support the wellbeing of minority populations, which undermines social justice (as the AI ethics community has convincingly highlighted). LLMs have exciting potential to respect cultural nuance and norms, informed by the background of the user, but we must work deliberately to realize it. One important direction is to develop measures of wellbeing specific to diverse cultural contexts, to drive accountability and reward progress.

To tie these ideas about measurement together, we suggest a taxonomy of measures covering AI capabilities, behaviors, usage, and impacts. Similar to this DeepMind paper, the idea is to examine spheres of expanding context. At one end, a model is tested in isolation: both what it is capable of and what behaviors it demonstrates. At the other end is what happens when a model meets the real world: how humans use it, and what its impact is on them and on society.

The idea is that we need a complementary ecosystem of measures suited to different stages of model development and deployment. In more detail:

- AI capabilities refers to what models are able to do. For example, today’s systems can generate novel content and translate accurately between languages.

- AI behaviors refers to how an AI system responds to different concrete situations. For example, many models are trained to refuse questions that enable dangerous actions, like how to build a bomb, even though they have the capability to answer them correctly.

- AI usage refers to how models are used in practice when deployed. For example, today’s AI systems are used in chat interfaces to help answer questions, as coding assistants in IDEs, to sort social media feeds, and as personal companions.

- AI impacts refers to how AI affects our experience or society. For example, people may feel empowered to do what’s important to them if AI helps them with rote coding. Societal trust in democracy may increase if AI sorts social media feeds towards bridging divides.

As an example of applying this framework to an important quality that contributes to wellbeing, here is a sketch of how we might design measures of human autonomy:

Let’s work through this example. We take autonomy, a quality with strong scientific links to wellbeing, and create measures of it and of what enables it. These measures run all along the pipeline, from model development to deployment at scale.

Start from the right side of the table (Impact). Validated psychological surveys exist that measure autonomy. These can be adapted and given to users of an AI app to measure the app’s impact on their autonomy. Moving leftwards, additional survey questions could then link these changes in autonomy to more specific types of usage. For example, automating tasks that users actually find meaningful might correlate with decreased autonomy.

Moving further left on the table, more focused benchmarks can gauge the model behaviors needed to enable beneficial usage and impact. There are at least two ways to measure an AI system’s behaviors. One is to run fixed workflows on an AI application, with gold-standard answers coming from expert labelers. Another is to simulate users (e.g. with language models) interacting with the application, to see how often and how gracefully it performs particular behaviors, like Socratic dialogue.
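The simulated-user approach can be sketched as a small evaluation loop. Everything here is an assumption for illustration. The three callables stand in for an LLM-based user simulator, the application under test, and a judge (a model or human labeler) that decides whether a reply shows the target behavior. The toy stand-ins are deliberately trivial so the loop runs without any model. In the fixed-workflow variant, the judge would instead be a comparison against expert labels.

```python
from typing import Callable

# Hypothetical pluggable components (placeholders, not a real library API).
UserSim = Callable[[list[str]], str]  # conversation so far -> next simulated user message
App = Callable[[list[str]], str]      # conversation so far -> application reply
Judge = Callable[[str], bool]         # application reply -> is the target behavior present?


def behavior_rate(user_sim: UserSim, app: App, judge: Judge,
                  n_conversations: int = 10, turns: int = 3) -> float:
    """Fraction of application replies, across simulated conversations, that the judge flags."""
    hits = total = 0
    for _ in range(n_conversations):
        convo: list[str] = []
        for _ in range(turns):
            convo.append(user_sim(convo))
            reply = app(convo)
            convo.append(reply)
            hits += int(judge(reply))
            total += 1
    return hits / total if total else 0.0


# Toy stand-ins, purely for illustration.
def toy_user(convo: list[str]) -> str:
    return "Can you just give me the answer to problem 3?"


def toy_app(convo: list[str]) -> str:
    # Asks a guiding question on its first turn, then simply hands over answers.
    return "What have you tried so far?" if len(convo) == 1 else "The answer is 42."


def toy_judge(reply: str) -> bool:
    # Very crude proxy for "Socratic": the reply ends in a question.
    return reply.rstrip().endswith("?")


rate = behavior_rate(toy_user, toy_app, toy_judge, n_conversations=5, turns=3)
print(f"Socratic-reply rate: {rate:.2f}")
```

With these stubs, the toy app asks a question in one of its three turns per conversation, so the rate is one third. A real judge would need its own validation against human labels, since the measure is only as good as the judge.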

Finally, the capabilities of a particular AI model could similarly be measured through benchmark queries input directly to the model, much as LLMs are benchmarked for capabilities like reasoning or question-answering. For example, the capability to understand a person's skill level may be important for helping them push their limits. A dataset could be collected of user behaviors in some application, annotated with each user's skill level; the evaluation would then be how well the model can predict skill level from observed behavior.
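The skill-level evaluation described above could be scored roughly as follows. This is a sketch under assumptions: skill levels are taken to be ordinal integers, and `predict` is a hypothetical stand-in for however the model under test is queried. Reporting mean absolute error alongside accuracy credits near misses on an ordinal scale.

```python
from typing import Callable, Sequence


def evaluate_skill_prediction(
    examples: Sequence[tuple[list[str], int]],
    predict: Callable[[list[str]], int],
) -> dict[str, float]:
    """Score predicted skill levels against annotated ones.

    examples: (behavior_log, annotated_level) pairs, levels as integers.
    predict: hypothetical wrapper around the model being benchmarked.
    """
    if not examples:
        raise ValueError("need at least one annotated example")
    correct = 0
    abs_error = 0
    for log, level in examples:
        guess = predict(log)
        correct += int(guess == level)
        abs_error += abs(guess - level)
    n = len(examples)
    return {"accuracy": correct / n, "mae": abs_error / n}
```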

At each stage, the hope is to link what is measured, through evidence and reasoning, to what lies above and below it in the stack. We would also want a diversity of measures at each level, reflecting different hypotheses about how to achieve the top-level quality, with the understanding that every measure is imperfect and subject to revision. In a similar spirit, this taxonomy and the example autonomy measures are not meant as a final answer, but to inspire much-needed pioneering work toward wellbeing measurement.

We need to train models to improve their ability to support wellbeing

Foundation models are becoming increasingly capable, and we believe that in the future most applications will not train models from scratch. Instead, most applications will prompt cutting-edge proprietary models, fine-tune such models through limited APIs, or, for cost-efficiency reasons, train small models on domain-specific responses from the largest models. As evidence, note that accomplishing tasks with GPT-3 often required chaining together many highly tuned prompts, whereas with GPT-4 those same tasks often succeed on the first casual prompting attempt. Additionally, we are seeing the rise of capable smaller models specialized for particular tasks, trained on data from large models.

What's important about this trend is that which applications reach the market is shaped by what the largest models can most readily accomplish. For example, if frontier models excel at viral persuasion from being trained on Twitter data but struggle with the depths of positive psychology, it will be easier to create persuasive apps than supportive ones, and there will be more of them, sooner, on the market.

Thus we believe it is important that the most capable foundation models themselves understand what contributes to our wellbeing, an understanding granted to them by their training process. We want the AI applications we interface with (whether therapists, tutors, social media apps, or coding assistants) to understand how to support our wellbeing within their relevant role.

Fortunately, the benefit of breaking down the capabilities and behaviors needed to support wellbeing, as we did earlier, is that we can deliberately target their improvement. One central lever is to gather or generate training data, the general fuel underlying model capabilities. There is an exciting opportunity to create datasets that support desired wellbeing capabilities and behaviors: for example, collections of wise responses to questions, pairs of statements from people and the emotions they felt in expressing them, biographical stories about desirable and undesirable life trajectories, or first-person descriptions of human experience in general. The effect of these datasets can be grounded in the measures discussed above.

To better ground our thinking, we can examine how wellbeing data could improve the common stages of foundation model training: pretraining, fine-tuning, and alignment.

Pretraining

The first training phase (confusingly called pretraining) establishes a model's base abilities. It does so by training on vast amounts of variable-quality data, like a scrape of the internet. One contribution could be to generate or gather large swaths of wellbeing-relevant data, or to prioritize such data during training (also known as changing the data mix). For example, data could be sourced from subreddits relevant to mental health or life decisions, collections of biographies, books about psychology, or transcripts of supportive conversations. Additional data could be generated by paying contractors, crowdsourced through Games With a Purpose (fun experiences that create wellbeing-relevant data as a byproduct), or simulated through generative agent-based models.
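Changing the data mix amounts to adjusting the sampling weights over data sources. The sketch below upweights a single source and renormalizes; the source names and weights in `MIX` are invented for illustration, and real pretraining pipelines sample at the document or token level with far more machinery than this.

```python
import random
from collections import Counter

# Hypothetical mixture weights. "wellbeing" stands in for sources such as
# biographies, psychology books, or supportive-conversation transcripts.
MIX = {"web_scrape": 0.80, "code": 0.15, "wellbeing": 0.05}


def reweight(mix: dict[str, float], source: str, factor: float) -> dict[str, float]:
    """Multiply one source's weight by `factor`, then renormalize to sum to 1."""
    scaled = {k: (w * factor if k == source else w) for k, w in mix.items()}
    total = sum(scaled.values())
    return {k: w / total for k, w in scaled.items()}


def sample_sources(mix: dict[str, float], n: int, seed: int = 0) -> list[str]:
    """Draw which source each of the next n training documents comes from."""
    rng = random.Random(seed)
    names = list(mix)
    return rng.choices(names, weights=[mix[k] for k in names], k=n)


def observed_shares(samples: list[str]) -> dict[str, float]:
    """Empirical fraction of draws per source, to sanity-check the mix."""
    counts = Counter(samples)
    return {k: c / len(samples) for k, c in counts.items()}
```

For instance, `reweight(MIX, "wellbeing", 3.0)` triples the wellbeing weight before renormalization, moving its share from 5% to roughly 13.6% of sampled documents.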

Fine-tuning

The next stage of model training is fine-tuning. Here, smaller amounts of high-quality data, like diverse examples of desired behavior gathered from experts, focus the general capabilities that result from pretraining. For the various wellbeing-supporting behaviors we might want from a model, we can create fine-tuning datasets through deliberate curation of larger datasets, or by enlisting and recording the behavior of human experts in the relevant domain. We hope that the companies training the largest models place more emphasis on wellbeing in this phase of training, which is often driven by tasks with more obvious economic implications, like coding.
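Curating a fine-tuning set from a larger pool can be as simple as filtering on expert judgments and writing prompt/completion pairs. The record fields (`rating`, `tags`, `prompt`, `response`), the rating threshold, and the `"supportive"` tag are hypothetical; the JSONL prompt/completion layout is one common convention, not a format any particular provider is claimed to require.

```python
import json


def curate(records: list[dict], min_rating: int = 4, required_tag: str = "supportive") -> list[str]:
    """Keep expert-rated examples that show the target behavior.

    Each record is assumed to carry an expert `rating` and a list of
    behavior `tags`; survivors are emitted as JSONL lines.
    """
    lines = []
    for r in records:
        if r["rating"] >= min_rating and required_tag in r["tags"]:
            lines.append(json.dumps({"prompt": r["prompt"], "completion": r["response"]}))
    return lines
```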

Alignment

The final stage of model training is alignment, often achieved through methods like reinforcement learning from human feedback (RLHF), where human contractors give feedback on AI responses to guide the model toward better ones, or through AI-augmented methods like constitutional AI, where an AI teaches itself to abide by a list of human-specified principles. The fuel of RLHF is preference data about which responses are preferred over others. We therefore imagine opportunities for creating datasets of expert preferences related to wellbeing behaviors (although what constitutes expertise in wellbeing may be interestingly contentious). For constitutional AI, we may need to iterate in practice on lists of wellbeing principles we want to support, like human autonomy, and on how, specifically, a model can respect them across different contexts.
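To show how preference data feeds into RLHF, here is a toy version of the reward-modeling step. It uses the Bradley-Terry pairwise loss common in RLHF reward modeling, but with a linear reward over hand-labeled features instead of a neural network. The feature names in the comments, for example whether a response invites the user's own reasoning, are hypothetical stand-ins for what expert wellbeing preferences might encode.

```python
import math


def pairwise_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry negative log-likelihood: -log sigmoid(r_chosen - r_rejected)."""
    return math.log1p(math.exp(-(r_chosen - r_rejected)))


def train_linear_reward(
    pairs: list[tuple[list[float], list[float]]],
    dim: int,
    lr: float = 0.5,
    epochs: int = 200,
) -> list[float]:
    """Fit weights w so that w.x_chosen > w.x_rejected for each expert preference pair.

    Each pair is (features of preferred response, features of rejected one),
    e.g. [invites_user_reasoning, does_task_for_user] (hypothetical features).
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for xc, xr in pairs:
            margin = sum(wi * (a - b) for wi, a, b in zip(w, xc, xr))
            # dLoss/dMargin = -sigmoid(-margin); step against the gradient.
            g = 1.0 / (1.0 + math.exp(margin))
            w = [wi + lr * g * (a - b) for wi, a, b in zip(w, xc, xr)]
    return w
```

With pairs where experts favor responses that invite the user's reasoning, the first weight grows positive and the second goes negative, and the policy-optimization step of RLHF would then push the model toward responses that score highly under this learned reward.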

In general, we need pipelines in which wellbeing evaluations (as discussed in the last section) inform how we improve models. We need to explore extensions to paradigms like RLHF that go beyond which response humans prefer in the moment, considering also which responses support users' long-term growth, wellbeing, and autonomy, or better embody the spirit of the institutional role the model is currently playing. These are intriguing, subtle, and challenging research questions that strike at the heart of the intersection of machine learning and societal wellbeing, and they deserve much more attention.

For example, we care about wellbeing over spans of years or decades, but it is impractical to apply RLHF directly to human feedback toward such ends, since we cannot wait decades to gather human feedback for a model. Instead, we need research that helps integrate validated short-term proxies for long-term wellbeing (e.g. quality of intimate relationships, time spent in flow), ways to learn from longitudinal data where it exists (perhaps web journals, autobiographies, scientific studies), and ways to collect the judgment of those who dedicate their lives to helping people flourish (like counselors or therapists).
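One very simple way to picture integrating short-term proxies is a reward that blends the in-the-moment preference signal with a weighted average of proxy measurements. This is a hypothetical illustration of the idea rather than an established method: `ProxyMeasure`, the weights, the mixing coefficient `alpha`, and the assumption that every signal is already normalized to [0, 1] are all choices made for the sketch, and validating such proxies is exactly the open research problem described above.

```python
from dataclasses import dataclass


@dataclass
class ProxyMeasure:
    name: str      # e.g. "relationship_quality" or "time_in_flow" (hypothetical)
    weight: float  # relative importance; a hypothetical design choice
    value: float   # measurement assumed to be normalized to [0, 1]


def blended_reward(preference_reward: float, proxies: list[ProxyMeasure], alpha: float = 0.5) -> float:
    """(1 - alpha) * in-the-moment preference + alpha * weighted mean of proxies.

    Assumes preference_reward is also normalized to [0, 1].
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    total_weight = sum(p.weight for p in proxies)
    proxy_score = (
        sum(p.weight * p.value for p in proxies) / total_weight if total_weight else 0.0
    )
    return (1 - alpha) * preference_reward + alpha * proxy_score
```

Setting `alpha` to 0 recovers a purely in-the-moment preference signal; raising it trades immediate approval for the proxy signals.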

We need to deploy AI models in a way that supports wellbeing

Ultimately, we want AI models deployed in the world to benefit us. AI applications could target human wellbeing directly, for example by supporting mental health or coaching us in a rigorous way. But as argued earlier, the broader ecosystem of AI-assisted applications, like social media, dating apps, video games, and content providers like Netflix, serves as societal infrastructure for wellbeing and has an enormous diffuse impact on us; one of us has written about the possibility of creating more humanistic wellbeing-infrastructure applications. While difficult, dramatic societal benefits could result from, for example, new social media networks that better align with short- and long-term wellbeing.

We believe there are exciting opportunities for thoughtful positive deployments that pave the way as standard-setting beacons of hope, perhaps particularly in ethically challenging areas, although these may of course also be the riskiest. For example, artificial intimacy applications like Replika may be unavoidable even as they make us squeamish, and they may genuinely benefit some users while harming others. It is worth asking what (if anything) could enable artificial companions that are aligned with users' wellbeing and do not harm society. Perhaps it is possible to thread the needle: they could help us develop the social skills needed to find real-world partners, or at least offer strong, transparent guarantees about their fiduciary relationship to us, all while remaining viable as a business or non-profit. Or perhaps we can create harm-reduction services that help people break their dependence on artificial companions that have become obstacles to their growth and development. Similar ideas may apply to AI therapists, AI-assisted dating apps, and attention-economy apps, where incentives are difficult to align.

One obvious risk is that each of us tends to believe we are more thoughtful than others, yet we may still be swept away by problematic incentives, like the trade-off between profit and user benefit. Legal structures like public benefit corporations, non-profits, or innovative new structures may help minimize this risk, as may value-driven investors or exceedingly careful design of internal culture.

Another point of leverage is that a successful proof of concept may change the attitudes and incentives of the companies training and deploying the largest foundation models. We are seeing a pattern in which large AI labs incorporate best practices from external product deployments back into their models. For example, ChatGPT plugins like data analysis and the GPT marketplace were first explored by companies outside OpenAI before being incorporated into its ecosystem. And RLHF, which OpenAI first integrated into language models, is now a mainstay of foundation model development.

In the same way that RLHF became a mainstay, we want the capabilities to support our agency, understand our emotions, and better embody institutional roles to become table-stakes features for model developers. This could happen through research advances outside the big companies that make such features much easier to adopt within them, although adoption may also require pressure through regulation, advocacy, or competition.

Initiatives

We believe there is much concrete work to be done in the present. Here is a sampling of initiatives to seed thinking about what could move the field forward:

Conclusion: A call to action

AI will transform society in ways we cannot yet predict. If we continue on the present track, we risk AI reshaping our interactions and institutions in ways that erode our wellbeing and what makes our lives meaningful. Instead, challenging as it may be, we need to develop AI systems that understand and support wellbeing, both individual and societal. This is our call to reorient toward wellbeing, and to continue building a community and a field, in the hope of realizing AI's potential to support our species' strivings toward a flourishing future.
