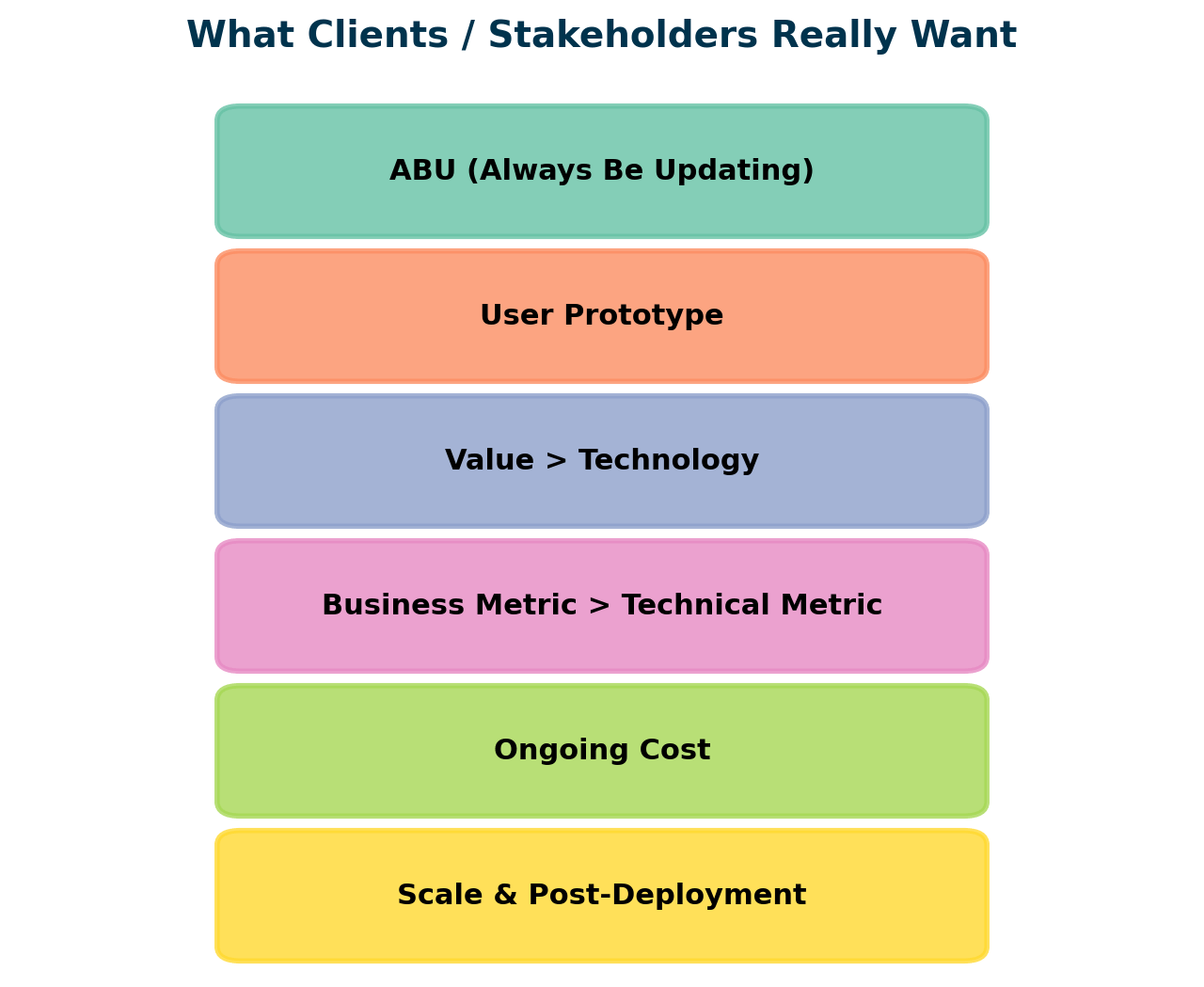

Clients and stakeholders don’t want surprises.

What they expect is clarity, consistent communication, and transparency. They want results, but they also want you to stay grounded and aligned with the project’s goals as a developer or product manager. Just as important, they want full visibility into the process.

In this blog post, I’ll share practical principles and tips to help keep AI projects on track. These insights come from over 15 years of managing and deploying AI initiatives, and this post is a follow-up to my blog post “Tips for setting expectations in AI projects”.

When working with AI projects, uncertainty isn’t just a side effect; it can make or break the entire initiative.

Throughout the blog sections, I’ll include practical items you can put into action immediately.

Let’s dive in!

ABU (Always Be Updating)

In sales, there’s a famous rule called ABC — Always Be Closing. The idea is simple: every interaction should move the client closer to a deal. In AI projects, we have another motto: ABU (Always Be Updating).

This rule means exactly what it says: never leave stakeholders in the dark. Even when there’s little or no progress, you need to communicate it quickly. Silence creates uncertainty, and uncertainty kills trust.

A straightforward way to apply ABU is with a short weekly email to every stakeholder. Keep it consistent, concise, and focused on four key points:

- Breakthroughs in performance or key milestones achieved during the week;

- Issues with deliverables or changes to last week’s plan that affect stakeholders’ expectations;

- Updates on the team or resources involved;

- Current progress on agreed success metrics.

This rhythm keeps everyone aligned without overwhelming them with noise. The key insight is that people don’t actually hate bad news; they hate bad surprises. If you stick to ABU and manage expectations week by week, you build credibility and protect the project when challenges inevitably come up.

Put the Product in Front of the Users

In AI projects, it’s easy to fall into the trap of building for yourself instead of for the people who will actually use the product/solution you are building.

Too often, I’ve seen teams get excited about features that matter to them but mean little to the end user.

So, don’t assume anything. Put the product in front of users as early and as often as possible. Real feedback is irreplaceable.

A practical way to do this is through lightweight prototypes or limited pilots. Even if the product is far from finished, showing it to users helps you test assumptions and prioritize features. When you start the project, commit to a prototype date as soon as possible.

Don’t Fall Into the Technology Trap

Engineers love technology — it’s part of the passion for the role. But in AI projects, technology is only an enabler, never the end goal. Just because something is technically possible (or looks impressive in a demo) doesn’t mean it solves the real problems of your customers or stakeholders.

So the guideline is very simple, yet difficult to follow: don’t start with the tech, start with the need. Every function or line of code should trace back to a clear user problem.

A practical way to apply this principle is to validate problems before solutions. Spend time with customers, map their pain points, and ask: “If this technology worked perfectly, would it actually matter to them?”

Cool features won’t save a product that doesn’t solve a problem. But when you anchor technology in real needs, adoption follows naturally.

Engineers often focus on optimizing technology or building cool features. But the best engineers (10x engineers) combine that technical strength with the rare ability to empathize with stakeholders.

Business Metrics Over Technical Metrics

It’s easy to get lost in technical metrics: accuracy, F1 score, ROC-AUC, precision, recall. Clients and stakeholders normally don’t care if your model is 0.5% more accurate; they care if it reduces churn, increases revenue, or saves time and costs. The worst part is that clients and stakeholders often believe technical metrics are what matter, when in a business context they rarely are. And it’s on you to convince them otherwise.

If your churn prediction model hits 92% accuracy, but the marketing team can’t design effective campaigns from its outputs, the metric means nothing. On the other hand, if a “less accurate” model helps reduce customer churn by 10% because it’s explainable, that’s a success.

A practical way to apply this is to define business metrics at the start of the project — ask:

- What’s the financial or operational goal? (example: reduce call center handling time by 20%)

- Which technical metrics best correlate with that outcome?

- How will we communicate results to non-technical stakeholders?

Sometimes the right metric isn’t accuracy at all. For example, in fraud detection, catching 70% of fraud cases with minimal false positives might be more valuable than a model that squeezes out 90% but blocks thousands of legitimate transactions.
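To make that trade-off concrete, here is a minimal Python sketch that compares two hypothetical fraud models by net business value rather than by catch rate. Every number in it (the fraud counts, the average loss per fraud case, the cost of wrongly blocking a legitimate transaction) is an illustrative assumption, not data from a real project, so plug in your client’s own figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOutcome:
    name: str
    fraud_caught: int    # true positives
    fraud_missed: int    # false negatives
    legit_blocked: int   # false positives


def recall(outcome: ModelOutcome) -> float:
    """Share of all fraud cases the model caught."""
    return outcome.fraud_caught / (outcome.fraud_caught + outcome.fraud_missed)


def net_value(outcome: ModelOutcome, avg_fraud_loss: float,
              cost_per_blocked_legit: float) -> float:
    """Money saved by caught fraud minus the cost of blocking good customers."""
    saved = outcome.fraud_caught * avg_fraud_loss
    friction = outcome.legit_blocked * cost_per_blocked_legit
    return saved - friction


# Hypothetical period with 1,000 fraud cases (all figures are assumptions).
conservative = ModelOutcome("conservative", fraud_caught=700,
                            fraud_missed=300, legit_blocked=200)
aggressive = ModelOutcome("aggressive", fraud_caught=900,
                          fraud_missed=100, legit_blocked=5000)

AVG_FRAUD_LOSS = 100.0           # assumed average loss per fraud case
COST_PER_BLOCKED_LEGIT = 10.0    # assumed support/churn cost per false positive

for model in (conservative, aggressive):
    value = net_value(model, AVG_FRAUD_LOSS, COST_PER_BLOCKED_LEGIT)
    print(f"{model.name}: recall={recall(model):.0%}, net value={value:,.0f}")
```

With these made-up numbers, the model that catches 70% of fraud comes out ahead (a net value of 68,000 vs. 40,000), because the aggressive model’s false positives eat most of its gains. That is the kind of comparison a non-technical stakeholder can act on.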

Ownership and Handover

Who owns the solution once it goes live? In case of success, will the client have reliable access to it at all times? What happens when your team is no longer working on the project?

These questions often get passed over, but they define the long-term impact of your work. You need to plan for handover from day one. That means documenting processes, transferring knowledge, and ensuring the client’s team can maintain and operate the model without your constant involvement.

Delivering an ML model is only half the job — post-deployment is often an important phase that gets lost in translation between business and tech.

Cost and Budget Visibility

How much will the solution cost to run? Are you using cloud infrastructure, LLMs, or other techniques that carry variable expenses the customer must understand?

From the start, you need to give stakeholders full visibility on cost drivers. This means breaking down infrastructure costs, licensing fees, and, especially with GenAI, usage expenses like token consumption.

A practical way to manage this is to set up clear cost-tracking dashboards or alerts and review them regularly with the client. For LLMs, estimate expected token usage under different scenarios (average query vs. heavy use) so there are no surprises later.
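As a rough illustration of that scenario exercise, the sketch below estimates monthly LLM spend for an average and a heavy usage pattern. The per-1,000-token prices, query volumes, and token counts are placeholder assumptions, not any provider’s real pricing, so replace them with the numbers from your own contract and logs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageScenario:
    name: str
    queries_per_day: int
    input_tokens_per_query: int
    output_tokens_per_query: int


def monthly_cost(scenario: UsageScenario, input_price_per_1k: float,
                 output_price_per_1k: float, days: int = 30) -> float:
    """Estimated monthly spend for one scenario, in the pricing currency."""
    daily_input = scenario.queries_per_day * scenario.input_tokens_per_query
    daily_output = scenario.queries_per_day * scenario.output_tokens_per_query
    daily_cost = (daily_input / 1000 * input_price_per_1k
                  + daily_output / 1000 * output_price_per_1k)
    return daily_cost * days


# Placeholder prices per 1,000 tokens (assumptions, not real provider pricing).
INPUT_PRICE_PER_1K = 0.001
OUTPUT_PRICE_PER_1K = 0.002

average = UsageScenario("average", queries_per_day=1_000,
                        input_tokens_per_query=500, output_tokens_per_query=200)
heavy = UsageScenario("heavy", queries_per_day=10_000,
                      input_tokens_per_query=2_000, output_tokens_per_query=800)

for scenario in (average, heavy):
    cost = monthly_cost(scenario, INPUT_PRICE_PER_1K, OUTPUT_PRICE_PER_1K)
    print(f"{scenario.name}: ~{cost:,.2f} per month")
```

With these placeholder inputs, the heavy scenario costs about 40 times as much as the average one (roughly 1,080 vs. 27 per month). Showing both figures side by side early on is what prevents a budget surprise later.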

Clients can accept costs, but they won’t accept hidden costs or costs that multiply unexpectedly as usage grows. Transparency on budget allows clients to plan realistically for scaling the solution.

Scale

Speaking of scale...

Scale is a different game altogether. It’s the stage where an AI solution can deliver the most business value, but also where most projects fail. Building a model in a notebook is one thing, but deploying it to handle real-world traffic, data, and user demands is another.

So be clear about how you will scale your solution. This is where data engineering and MLOps come in. Address the topics related to ensuring the entire pipeline (data ingestion, model training, deployment, monitoring) can grow with demand while staying reliable and cost-efficient.

Some critical areas to consider when communicating scale are:

- Software engineering practices: Version control, CI/CD pipelines, containerization, and automated testing to ensure your solution can evolve without breaking.

- MLOps capabilities: Automated retraining, monitoring for data drift and concept drift, and alerting systems that keep the model accurate over time.

- Infrastructure choices: Cloud vs. on-premises, horizontal scaling, cost controls, and whether you need specialized hardware.
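One common way to make “monitoring for data drift” concrete is the Population Stability Index (PSI), which compares how a feature is distributed in the training data versus live data. The sketch below is a plain-Python version under simplifying assumptions: equal-width bins taken from the baseline range, a small floor to avoid dividing by zero, and an alert threshold of 0.2, which is a commonly cited rule of thumb rather than a universal standard. The function names and sample data are hypothetical.

```python
import math


def population_stability_index(baseline, live, bins=10):
    """PSI between a baseline sample and a live sample of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; use 1.0 if all values are equal.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each share so empty bins don't cause log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(live)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def needs_attention(baseline, live, threshold=0.2):
    """Flag a feature whose PSI exceeds the (assumed) alert threshold."""
    return population_stability_index(baseline, live) > threshold


# Illustrative data: live values have shifted toward the upper half of the range.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print("same distribution flagged:", needs_attention(baseline, baseline))
print("shifted distribution flagged:", needs_attention(baseline, shifted))
```

In a real pipeline a check like this would run on a schedule for each important feature and feed the alerting system, so that drift shows up in your weekly update before it shows up in the business metrics.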

An AI solution that performs well in isolation is not enough. Real value comes when the solution can scale to thousands of users, adapt to new data, and continue delivering business impact long after the initial deployment.

Here are the practical tips we’ve seen in this post:

- Send a short weekly email to all stakeholders with breakthroughs, issues, team updates, and progress on metrics.

- Commit to an early prototype or pilot to test assumptions with end users.

- Validate problems first: don’t start with tech, start with user needs. User interviews are a great way to do this (if possible, get away from your desk and join the users in whatever job they are doing for a day).

- Define business metrics upfront and tie technical progress back to them.

- Plan for handover from day one: document, train the client team, and ensure ownership is clear.

- Set up a dashboard or alerts to track costs (especially for cloud and token-based GenAI solutions).

- Build with scalability in mind: CI/CD, monitoring for drift, modular pipelines, and infrastructure that can grow.

Any other tip you find relevant to share? Write it down in the comments or feel free to contact me via LinkedIn!
