Before we dive in:

- I am a developer at Google Cloud. Thoughts and opinions expressed here are entirely my own.

- The complete source code for this article, including future updates, is available in this notebook under the Apache 2.0 license.

- All new images in this article were generated with Gemini Nano Banana using the proof-of-concept generation pipeline explored here.

- You can experiment with Gemini for free in Google AI Studio. Please note that programmatic API access to Nano Banana is a pay-as-you-go service.

🔥 Challenge

We all have existing images worth reusing in different contexts. This would generally imply modifying the images, a complex (if not impossible) task requiring very specific skills and tools. This explains why our archives are full of forgotten or unused treasures. State-of-the-art vision models have evolved so much that we can reconsider this problem.

So, can we breathe new life into our visual archives?

Let’s try to complete this challenge with the following steps:

- 1️⃣ Start from an archive image we’d like to reuse

- 2️⃣ Extract a character to create a brand-new reference image

- 3️⃣ Generate a series of images to illustrate the character’s journey, using only prompts and the new assets

For this, we’ll explore the capabilities of “Gemini 2.5 Flash Image”, also known as “Nano Banana” 🍌.

🏁 Setup

🐍 Python packages

We’ll use the following packages:

- google-genai: The Google Gen AI Python SDK lets us call Gemini with a few lines of code

- networkx for graph management

We’ll also use the following dependencies:

- pillow and matplotlib for data visualization

- tenacity for request management

```
%pip install --quiet "google-genai>=1.38.0" "networkx[default]"
```

🤖 Gen AI SDK

Create a google.genai client:

```python
from google import genai

check_environment()  # Notebook helper (not shown in this article)

client = genai.Client()
```

Check your configuration:

```python
check_configuration(client)
```

```
Using the Vertex AI API with project "…" in location "global"
```

🧠 Gemini model

For this challenge, we’ll select the latest Gemini 2.5 Flash Image model (currently in preview):

```python
GEMINI_2_5_FLASH_IMAGE = "gemini-2.5-flash-image-preview"
```

💡 “Gemini 2.5 Flash Image” is also known as “Nano Banana” 🍌

🛠️ Helpers

Define some helper functions to generate and display images:

```python
import IPython.display
import tenacity
from google.genai.errors import ClientError
from google.genai.types import GenerateContentConfig, PIL_Image

GEMINI_2_5_FLASH_IMAGE = "gemini-2.5-flash-image-preview"
GENERATION_CONFIG = GenerateContentConfig(response_modalities=["TEXT", "IMAGE"])


def generate_content(sources: list[PIL_Image], prompt: str) -> PIL_Image | None:
    prompt = prompt.strip()
    contents = [*sources, prompt] if sources else prompt

    response = None
    for attempt in get_retrier():
        with attempt:
            response = client.models.generate_content(
                model=GEMINI_2_5_FLASH_IMAGE,
                contents=contents,
                config=GENERATION_CONFIG,
            )

    if not response or not response.candidates:
        return None
    if not (content := response.candidates[0].content):
        return None
    if not (parts := content.parts):
        return None

    image: PIL_Image | None = None
    for part in parts:
        if part.text:
            display_markdown(part.text)
            continue
        assert (sdk_image := part.as_image())
        assert (image := sdk_image._pil_image)
        display_image(image)

    return image


def get_retrier() -> tenacity.Retrying:
    return tenacity.Retrying(
        stop=tenacity.stop_after_attempt(7),
        wait=tenacity.wait_incrementing(start=10, increment=1),
        retry=should_retry_request,
        reraise=True,
    )


def should_retry_request(retry_state: tenacity.RetryCallState) -> bool:
    if not retry_state.outcome:
        return False
    err = retry_state.outcome.exception()
    if not isinstance(err, ClientError):
        return False
    print(f"❌ ClientError {err.code}: {err.message}")

    retry = False
    match err.code:
        case 400 if err.message is not None and " try again " in err.message:
            # Workshop: Cloud Storage accessed for the first time (service agent provisioning)
            retry = True
        case 429:
            # Workshop: temporary project with 1 QPM quota
            retry = True
    print(f"🔄 Retry: {retry}")

    return retry


def display_markdown(markdown: str) -> None:
    IPython.display.display(IPython.display.Markdown(markdown))


def display_image(image: PIL_Image) -> None:
    IPython.display.display(image)
```

🖼️ Assets

Let’s define the assets for our character’s journey and the functions to manage them:

```python
import enum
from collections.abc import Sequence
from dataclasses import dataclass


class AssetId(enum.StrEnum):
    ARCHIVE = "0_archive"
    ROBOT = "1_robot"
    MOUNTAINS = "2_mountains"
    VALLEY = "3_valley"
    FOREST = "4_forest"
    CLEARING = "5_clearing"
    ASCENSION = "6_ascension"
    SUMMIT = "7_summit"
    BRIDGE = "8_bridge"
    HAMMOCK = "9_hammock"


@dataclass
class Asset:
    id: str
    source_ids: Sequence[str]
    prompt: str
    pil_image: PIL_Image


class Assets(dict[str, Asset]):
    def set_asset(self, asset: Asset) -> None:
        # Note: This replaces any existing asset (if needed, add guardrails to auto-save|keep all versions)
        self[asset.id] = asset


def generate_image(source_ids: Sequence[str], prompt: str, new_id: str = "") -> None:
    sources = [assets[source_id].pil_image for source_id in source_ids]
    prompt = prompt.strip()
    image = generate_content(sources, prompt)
    if image and new_id:
        assets.set_asset(Asset(new_id, source_ids, prompt, image))


assets = Assets()
```

📦 Reference archive

We can now fetch our reference archive and make it our first asset:

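```python
import urllib.request

import PIL.Image
import PIL.ImageOps

ARCHIVE_URL = "https://storage.googleapis.com/github-repo/generative-ai/gemini/use-cases/media-generation/consistent_imagery_generation/0_archive.png"


def load_archive() -> None:
    image = get_image_from_url(ARCHIVE_URL)
    # Keep original details in 16:9 landscape aspect ratio (arbitrary)
    image = crop_expand_if_needed(image, 1344, 768)
    assets.set_asset(Asset(AssetId.ARCHIVE, [], "", image))
    display_image(image)


def get_image_from_url(image_url: str) -> PIL_Image:
    with urllib.request.urlopen(image_url) as response:
        return PIL.Image.open(response)


def crop_expand_if_needed(image: PIL_Image, dst_w: int, dst_h: int) -> PIL_Image:
    # Sketch (assumed behavior): center-crop, then pad with white, without any rescaling
    src_w, src_h = image.size
    if dst_w < src_w or dst_h < src_h:
        left, top = max(0, (src_w - dst_w) // 2), max(0, (src_h - dst_h) // 2)
        image = image.crop((left, top, left + min(src_w, dst_w), top + min(src_h, dst_h)))
    w, h = image.size
    if w < dst_w or h < dst_h:
        dx, dy = dst_w - w, dst_h - h
        border = (dx // 2, dy // 2, dx - dx // 2, dy - dy // 2)  # Left, top, right, bottom
        image = PIL.ImageOps.expand(image, border=border, fill="white")
    return image


load_archive()
```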

💡 Gemini keeps the aspect ratio closest to that of the last input image. Consequently, we cropped the archive image to 1344 × 768 pixels (close to 16:9) to preserve the original details (no rescaling) and keep the same landscape resolution in all our future scenes. Gemini can generate 1024 × 1024 images (1:1) but also their 16:9, 9:16, 4:3, and 3:4 equivalents (in terms of tokens).
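To make this rule concrete, here is a minimal sketch that picks, among the ratios listed above, the one closest to a given image size. The helper name `closest_aspect_ratio` and the log-scale comparison are assumptions for illustration; this is not the model's documented selection logic.

```python
import math
from collections.abc import Sequence

# Ratios (width:height) mentioned above; any other ratio is ignored in this sketch
SUPPORTED_RATIOS = ("1:1", "16:9", "9:16", "4:3", "3:4")


def ratio_value(ratio: str) -> float:
    """Convert a "W:H" string into W / H."""
    w, h = ratio.split(":")
    return int(w) / int(h)


def closest_aspect_ratio(width: int, height: int, ratios: Sequence[str] = SUPPORTED_RATIOS) -> str:
    """Hypothetical helper: return the ratio nearest to width / height."""
    target = math.log(width / height)
    # A log scale treats landscape and portrait symmetrically (2:1 and 1:2 are equally far from 1:1)
    return min(ratios, key=lambda r: abs(math.log(ratio_value(r)) - target))


print(closest_aspect_ratio(1344, 768))  # 1344 / 768 = 1.75, nearest to 16:9 (≈ 1.78)
```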

This archive image was generated in July 2024 with a beta version of Imagen 3, prompted with “On white background, a small hand-felted toy of blue robot. The felt is soft and cuddly…”. The result looked really good but, at the time, there was absolutely no determinism and no consistency. As a result, this was a nice one-shot image generation and the cute little robot seemed gone forever…

Let’s try to extract our little robot:

```python
source_ids = [AssetId.ARCHIVE]
prompt = "Extract the robot as is, without its shadow, replacing everything with a solid white fill."

generate_image(source_ids, prompt)
```

⚠️ The robot is perfectly extracted, but this is essentially a good background removal, which many models can perform. This prompt uses terms from graphics software, whereas we can now reason in terms of image composition. It’s also not necessarily a good idea to try to use traditional binary masks, as object edges and shadows convey significant details about shapes, textures, positions, and lighting.

Let’s go back to our archive to perform an advanced extraction instead, and directly generate a character sheet…

🪄 Character sheet

Gemini has spatial understanding, so it’s able to provide different views while preserving visual features. Let’s generate a front/back character sheet and, as our little robot will go on a journey, also add a backpack at the same time:

```python
source_ids = [AssetId.ARCHIVE]
prompt = """
- Scene: Robot character sheet.
- Left: Front view of the extracted robot.
- Right: Back view of the extracted robot (seamless back).
- The robot wears a same small, brown-felt backpack, with a tiny polished-brass buckle and simple straps in both views. The backpack straps are visible in both views.
- Background: Pure white.
- Text: On the top, caption the image "ROBOT CHARACTER SHEET" and, on the bottom, caption the views "FRONT VIEW" and "BACK VIEW".
"""
new_id = AssetId.ROBOT

generate_image(source_ids, prompt, new_id)
```

💡 A few remarks:

- The prompt describes the scene in terms of composition, as commonly used in media studios.

- If we try successive generations, they are consistent, with all robot features preserved.

- Our prompt does detail some aspects of the backpack, but everything left unspecified will vary slightly from one generation to the next.

- For the sake of simplicity, we added the backpack directly in the character sheet but, in a real production pipeline, we would probably make it part of a separate accessory sheet.

- To control the exact backpack shape and design, we could also provide a reference photo and ask to “transform the backpack into a stylized felt version”.

This new asset can now serve as a design reference in our future image generations.

✨ First scene

Let’s get started with a mountain scenery:

```python
source_ids = [AssetId.ROBOT]
prompt = """
- Image 1: Robot character sheet.
- Scene: Macro photography of a beautifully crafted miniature diorama.
- Background: Soft-focus of a panoramic range of interspersed, dome-like felt mountains, in various shades of medium blue/green, with curvy white snowcaps, extending over the entire horizon.
- Foreground: In the bottom-left, the robot stands on the edge of a medium-gray felt cliff, viewed from a 3/4 back angle, looking out over a sea of clouds (made of white cotton).
- Lighting: Studio, clean and soft.
"""
new_id = AssetId.MOUNTAINS

generate_image(source_ids, prompt, new_id)
```

💡 The mountain shape is specified as “dome-like” so our character can stand on one of the summits later on.

It’s important to spend some time on this first scene: through a cascading effect, it defines the overall look of our story. Refine the prompt, or run a few generations, to get the best variation.

From now on, our generation inputs will be both the character sheet and a reference scene…

✨ Successive scenes

Let’s get the robot down a valley:

```python
source_ids = [AssetId.ROBOT, AssetId.MOUNTAINS]
prompt = """
- Image 1: Robot character sheet.
- Image 2: Previous scene.
- The robot has descended from the cliff to a gray felt valley. It stands in the center, seen directly from the back. It is holding/reading a felt map with outstretched arms.
- Large smooth, round, felt rocks in various beige/gray shades are visible on the sides.
- Background: The distant mountain range. A thin layer of clouds obscures its base and the end of the valley.
- Lighting: Golden hour light, soft and diffused.
"""
new_id = AssetId.VALLEY

generate_image(source_ids, prompt, new_id)
```

💡 A few notes:

- The provided specifications about our input images ("Image 1:…", "Image 2:…") are important. Without them, “the robot” could refer to any of the 3 robots in the input images (2 in the character sheet, 1 in the previous scene). With them, we indicate that it’s the same robot. In case of confusion, we can be more specific with "the [entity] from image [number]".

- On the other hand, since we didn’t provide a precise description of the valley, successive requests will give different, interesting, and creative results (we can pick our favorite or make the prompt more precise for more determinism).

- Here, we also tested a different lighting, which significantly changes the whole scene.
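A lightweight guardrail for this convention is to check that a prompt labels images 1 to N exactly once, where N is the number of entries in `source_ids`. This is a minimal sketch assuming labels follow the `- Image N:` bullet format used here; `labels_match_sources` and `IMAGE_LABEL` are hypothetical names.

```python
import re

# Matches bullet labels such as "- Image 2: Previous scene." at the start of a line
IMAGE_LABEL = re.compile(r"^[ \t]*-?[ \t]*Image (\d+):", re.MULTILINE)


def labels_match_sources(prompt: str, source_count: int) -> bool:
    """Hypothetical check: True if the prompt labels images 1..source_count exactly once each."""
    numbers = sorted(int(n) for n in IMAGE_LABEL.findall(prompt))
    return numbers == list(range(1, source_count + 1))


prompt = """
- Image 1: Robot character sheet.
- Image 2: Previous scene.
- The robot goes on and faces a dense, infinite forest of simple, giant, thin trees.
"""
print(labels_match_sources(prompt, 2))  # True
print(labels_match_sources(prompt, 3))  # False: a third input image would have no label
```

Calling it with `len(source_ids)` before `generate_image` would catch a forgotten label; it cannot verify that each label actually describes the matching image.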

Then, we can move forward into this scene:

```python
source_ids = [AssetId.ROBOT, AssetId.VALLEY]
prompt = """
- Image 1: Robot character sheet.
- Image 2: Previous scene.
- The robot goes on and faces a dense, infinite forest of simple, giant, thin trees, that fills the entire background.
- The trees are made from various shades of light/medium/dark green felt.
- The robot is on the right, viewed from a 3/4 rear angle, no longer holding the map, with both hands clasped to its ears in despair.
- On the left & right bottom sides, rocks (similar to image 2) are partially visible.
"""
new_id = AssetId.FOREST

generate_image(source_ids, prompt, new_id)
```

💡 Of interest:

- We could position the character, change its point of view, and even “animate” its arms for more expressivity.

- The “no longer holding the map” clarification prevents the model from trying to carry the map over from the previous scene in some meaningful way (e.g., showing that the robot dropped it on the floor).

- We didn’t provide lighting details: The lighting source, quality, and direction have been kept from the previous scene.

Let’s go through the forest:

```python
source_ids = [AssetId.ROBOT, AssetId.FOREST]
prompt = """
- Image 1: Robot character sheet.
- Image 2: Previous scene.
- The robot goes through the dense forest and emerges into a clearing, pushing aside two tree trunks.
- The robot is in the center, now seen from the front view.
- The ground is made of green felt, with flat patches of white felt snow. Rocks are no longer visible.
"""
new_id = AssetId.CLEARING

generate_image(source_ids, prompt, new_id)
```

💡 We changed the ground but didn’t provide additional details for the view and the forest: The model will generally preserve most of the trees.

Now that the valley-forest sequence is over, we can journey up to the mountains, using the original mountain scene as our reference to return to that environment:

```python
source_ids = [AssetId.ROBOT, AssetId.MOUNTAINS]
prompt = """
- Image 1: Robot character sheet.
- Image 2: Previous scene.
- Close-up of the robot now climbing the peak of a medium-green mountain and reaching its summit.
- The mountain is right in the center, with the robot on its left slope, viewed from a 3/4 rear angle.
- The robot has both feet on the mountain and is using two felt ice axes (brown handles, gray heads), reaching the snowcap.
- Horizon: The distant mountain range.
"""
new_id = AssetId.ASCENSION

generate_image(source_ids, prompt, new_id)
```

💡 The mountain close-up, inferred from the blurred background, is pretty impressive.

Let’s climb to the summit:

```python
source_ids = [AssetId.ROBOT, AssetId.ASCENSION]
prompt = """
- Image 1: Robot character sheet.
- Image 2: Previous scene.
- The robot reaches the top and stands on the summit, seen in the front view, in close-up.
- It is no longer holding the ice axes, which are planted upright in the snow on each side.
- It has both arms raised in sign of victory.
"""
new_id = AssetId.SUMMIT

generate_image(source_ids, prompt, new_id)
```

💡 This is a logical follow-up but also a nice, different view.

Now, let’s try something different to significantly recompose the scene:

```python
source_ids = [AssetId.ROBOT, AssetId.SUMMIT]
prompt = """
- Image 1: Robot character sheet.
- Image 2: Previous scene.
- Remove the ice axes.
- Move the center mountain to the left edge of the image and add a slightly taller medium-blue mountain to the right edge.
- Suspend a stylized felt bridge between the two mountains: Its deck is made of thick felt planks in various wood shades.
- Place the robot on the center of the bridge with one arm pointing toward the blue mountain.
- View: Close-up.
"""
new_id = AssetId.BRIDGE

generate_image(source_ids, prompt, new_id)
```

💡 Of interest:

- This imperative prompt composes the scene in terms of actions. This is sometimes easier to write than a description.

- A new mountain is added as instructed, and it is both different and consistent.

- The bridge attaches to the summits in very plausible ways and seems to obey the laws of physics.

- The “Remove the ice axes” instruction is here for a reason. Without it, it’s as if we were prompting “do whatever you can with the ice axes from the previous scene: leave them where they are, don’t let the robot leave without them, or anything else”, leading to random results.

- It’s also possible to get the robot to walk on the bridge, seen from the side (which we never generated before), but it’s hard to have it consistently walk from left to right. Adding left and right views in the character sheet should fix this.

Let’s generate a final scene and let the robot get some well-deserved rest:

```python
source_ids = [AssetId.ROBOT, AssetId.BRIDGE]
prompt = """
- Image 1: Robot character sheet.
- Image 2: Previous scene.
- The robot is sleeping peacefully (both eyes changed into a "closed" state), in a comfortable brown-and-tan tartan hammock that has replaced the bridge.
"""
new_id = AssetId.HAMMOCK

generate_image(source_ids, prompt, new_id)
```

💡 Of interest:

- This time, the prompt is descriptive, and it works as well as the previous imperative prompt.

- The bridge-hammock transformation is really nice and preserves the attachments on the mountain summits.

- The robot transformation is also impressive, as it hasn’t been seen in this position before.

- The closed eyes are the most difficult detail to get consistently (may require a couple of attempts), probably because we’re accumulating many different transformations at once (and diluting the model’s attention). For full control and more deterministic results, we can focus on significant changes over iterative steps, or create various character sheets upfront.

We have illustrated our story with 9 new consistent images! Let’s take a step back to understand what we’ve built…

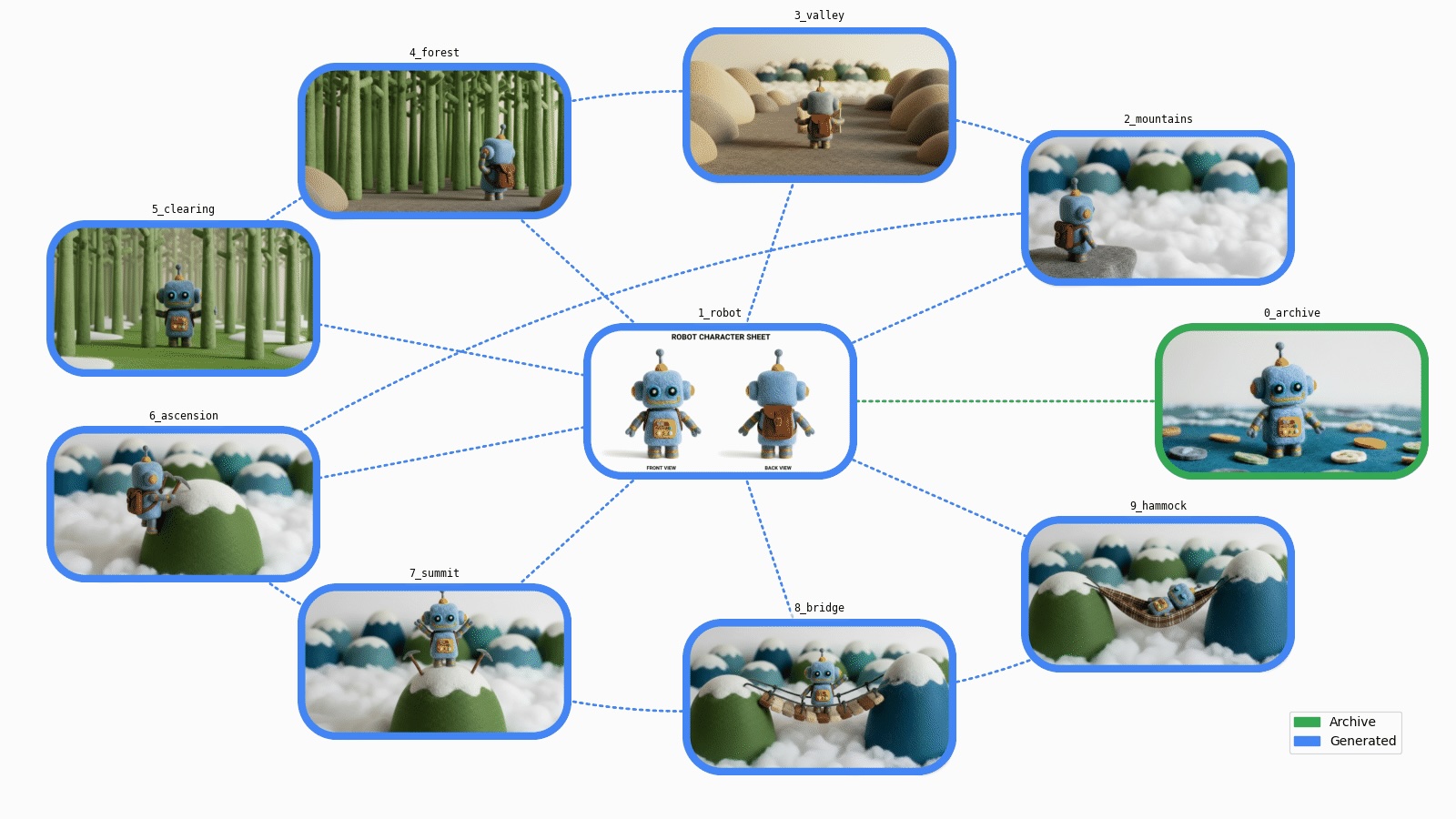

🗺️ Graph visualization

We now have a collection of image assets, from archives to brand-new generated assets.

Let’s add some data visualization to get a better sense of the steps completed…

🔗 Directed graph

Our new assets are all related, connected by one or more “generated from” links. From a data structure point of view, this is a directed graph.

We can build the corresponding directed graph using the networkx library:

```python
import networkx as nx


def build_graph(assets: Assets) -> nx.DiGraph:
    graph = nx.DiGraph(assets=assets)
    # Nodes
    for asset in assets.values():
        graph.add_node(asset.id, asset=asset)
    # Edges
    for asset in assets.values():
        for source_id in asset.source_ids:
            graph.add_edge(source_id, asset.id)
    return graph


asset_graph = build_graph(assets)
print(asset_graph)
```

```
DiGraph with 10 nodes and 16 edges
```

Let’s place the most used asset in the center and display the other assets around it:

```python
import matplotlib.pyplot as plt


def display_basic_graph(graph: nx.Graph) -> None:
    pos = compute_node_positions(graph)
    color = "#4285F4"
    options = dict(
        node_color=color,
        edge_color=color,
        arrowstyle="wedge",
        with_labels=True,
        font_size="small",
        bbox=dict(ec="black", fc="white", alpha=0.7),
    )
    nx.draw(graph, pos, **options)
    plt.show()


def compute_node_positions(graph: nx.Graph) -> dict[str, tuple[float, float]]:
    # Put the most connected node in the center
    center_node = most_connected_node(graph)
    edge_nodes = set(graph) - {center_node}
    pos = nx.circular_layout(graph.subgraph(edge_nodes))
    pos[center_node] = (0.0, 0.0)
    return pos


def most_connected_node(graph: nx.Graph) -> str:
    if not graph.nodes():
        return ""
    centrality_by_id = nx.degree_centrality(graph)
    return max(centrality_by_id, key=lambda s: centrality_by_id.get(s, 0.0))


display_basic_graph(asset_graph)
```

That’s a correct summary of our different steps. It’d be nice if we could also visualize our assets…
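For reference, `nx.degree_centrality` divides each node's degree (in-degree plus out-degree in a directed graph) by n - 1. The following stdlib-only sketch reproduces that computation on the “generated from” links of our 10 assets, which shows why the character sheet lands in the center; the `SOURCES` mapping is transcribed from the generation steps above, and the function is a hypothetical re-implementation, not the networkx code.

```python
from collections import Counter

# Asset ID → source IDs, as used in the generation steps above
SOURCES: dict[str, list[str]] = {
    "0_archive": [],
    "1_robot": ["0_archive"],
    "2_mountains": ["1_robot"],
    "3_valley": ["1_robot", "2_mountains"],
    "4_forest": ["1_robot", "3_valley"],
    "5_clearing": ["1_robot", "4_forest"],
    "6_ascension": ["1_robot", "2_mountains"],
    "7_summit": ["1_robot", "6_ascension"],
    "8_bridge": ["1_robot", "7_summit"],
    "9_hammock": ["1_robot", "8_bridge"],
}


def degree_centrality(sources: dict[str, list[str]]) -> dict[str, float]:
    degree: Counter[str] = Counter()
    for node, parents in sources.items():
        degree[node] += len(parents)  # In-degree: one edge per source
        for parent in parents:
            degree[parent] += 1  # Out-degree of each source
    n = len(sources)
    return {node: degree[node] / (n - 1) for node in sources}


centrality = degree_centrality(SOURCES)
edge_count = sum(len(parents) for parents in SOURCES.values())
print(edge_count)  # 16
print(max(centrality, key=centrality.get))  # 1_robot (degree 1 + 8 = 9, so 9 / 9 = 1.0)
```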

🌟 Asset graph

Let’s add custom matplotlib functions to render the graph nodes with the assets in a more visually appealing way:

```python
import typing
from collections.abc import Iterator
from io import BytesIO
from pathlib import Path

import PIL.Image
import PIL.ImageDraw
from google.genai.types import PIL_Image
from matplotlib.axes import Axes
from matplotlib.backends.backend_agg import FigureCanvasAgg
from matplotlib.figure import Figure
from matplotlib.image import AxesImage
from matplotlib.patches import Patch
from matplotlib.text import Annotation
from matplotlib.transforms import Bbox, TransformedBbox


@enum.unique
class ImageFormat(enum.StrEnum):
    # Lowercase PIL format names (also used as file extensions)
    WEBP = enum.auto()
    PNG = enum.auto()
    GIF = enum.auto()


def yield_generation_graph_frames(
    graph: nx.DiGraph,
    animated: bool,
) -> Iterator[PIL_Image]:
    def get_fig_ax() -> tuple[Figure, Axes]:
        factor = 1.0
        figsize = (16 * factor, 9 * factor)
        fig, ax = plt.subplots(figsize=figsize)
        fig.tight_layout(pad=3)
        handles = [
            Patch(color=COL_OLD, label="Archive"),
            Patch(color=COL_NEW, label="Generated"),
        ]
        ax.legend(handles=handles, loc="lower right")
        ax.set_axis_off()
        return fig, ax

    def prepare_graph() -> None:
        arrows = nx.draw_networkx_edges(graph, pos, ax=ax)
        for arrow in arrows:
            arrow.set_visible(False)

    def get_box_size() -> tuple[float, float]:
        xlim_l, xlim_r = ax.get_xlim()
        ylim_t, ylim_b = ax.get_ylim()
        factor = 0.08
        box_w = (xlim_r - xlim_l) * factor
        box_h = (ylim_b - ylim_t) * factor
        return box_w, box_h

    def add_axes() -> Axes:
        xf, yf = tr_figure(pos[node])
        xa, ya = tr_axes([xf, yf])
        x_y_w_h = (xa - box_w / 2.0, ya - box_h / 2.0, box_w, box_h)
        a = plt.axes(x_y_w_h)
        a.set_title(
            asset.id,
            loc="center",
            backgroundcolor="#FFF8",
            fontfamily="monospace",
            fontsize="small",
        )
        a.set_axis_off()
        return a

    def draw_box(color: str, image: bool) -> AxesImage:
        if image:
            result = pil_image.copy()
        else:
            result = PIL.Image.new("RGB", image_size, color="white")
        xy = ((0, 0), image_size)
        # Draw box outline
        draw = PIL.ImageDraw.Draw(result)
        draw.rounded_rectangle(xy, box_r, outline=color, width=outline_w)
        # Make everything outside the box outline transparent
        mask = PIL.Image.new("L", image_size, 0)
        draw = PIL.ImageDraw.Draw(mask)
        draw.rounded_rectangle(xy, box_r, fill=0xFF)
        result.putalpha(mask)
        return a.imshow(result)

    def draw_prompt() -> Annotation:
        text = f"Prompt:\n{asset.prompt}"
        margin = 2 * outline_w
        image_w, image_h = image_size
        bbox = Bbox([[0, margin], [image_w - margin, image_h - margin]])
        clip_box = TransformedBbox(bbox, a.transData)
        return a.annotate(
            text,
            xy=(0, 0),
            xytext=(0.06, 0.5),
            xycoords="axes fraction",
            textcoords="axes fraction",
            verticalalignment="center",
            fontfamily="monospace",
            fontsize="small",
            linespacing=1.3,
            annotation_clip=True,
            clip_box=clip_box,
        )

    def draw_edges() -> None:
        STYLE_STRAIGHT = "arc3"
        STYLE_CURVED = "arc3,rad=0.15"
        for parent in graph.predecessors(node):
            edge = (parent, node)
            color = COL_NEW if assets[parent].prompt else COL_OLD
            style = STYLE_STRAIGHT if center_node in edge else STYLE_CURVED
            nx.draw_networkx_edges(
                graph,
                pos,
                [edge],
                width=2,
                edge_color=color,
                style="dotted",
                ax=ax,
                connectionstyle=style,
            )

    def get_frame() -> PIL_Image:
        canvas = typing.cast(FigureCanvasAgg, fig.canvas)
        canvas.draw()
        image_size = canvas.get_width_height()
        image_bytes = canvas.buffer_rgba()
        return PIL.Image.frombytes("RGBA", image_size, image_bytes).convert("RGB")

    COL_OLD = "#34A853"
    COL_NEW = "#4285F4"

    assets = graph.graph["assets"]
    center_node = most_connected_node(graph)
    pos = compute_node_positions(graph)
    fig, ax = get_fig_ax()
    prepare_graph()
    box_w, box_h = get_box_size()
    tr_figure = ax.transData.transform  # Data → display coords
    tr_axes = fig.transFigure.inverted().transform  # Display → figure coords

    for node, data in graph.nodes(data=True):
        if animated:
            yield get_frame()

        # Edges and sub-plot
        asset = data["asset"]
        pil_image = asset.pil_image
        image_size = pil_image.size
        box_r = min(image_size) * 25 / 100  # Radius for rounded rect
        outline_w = min(image_size) * 5 // 100
        draw_edges()
        a = add_axes()  # a is used in sub-functions

        # Prompt
        if animated and asset.prompt:
            box = draw_box(COL_NEW, image=False)
            prompt = draw_prompt()
            yield get_frame()
            box.set_visible(False)
            prompt.set_visible(False)

        # Generated image
        color = COL_NEW if asset.prompt else COL_OLD
        draw_box(color, image=True)

    plt.close()
    yield get_frame()


def draw_generation_graph(
    graph: nx.DiGraph,
    format: ImageFormat,
) -> BytesIO:
    frames = list(yield_generation_graph_frames(graph, animated=False))
    assert len(frames) == 1
    frame = frames[0]

    params: dict[str, typing.Any] = dict()
    match format:
        case ImageFormat.WEBP:
            params.update(lossless=True)

    image_io = BytesIO()
    frame.save(image_io, format, **params)
    return image_io


def draw_generation_graph_animation(
    graph: nx.DiGraph,
    format: ImageFormat,
) -> BytesIO:
    frames = list(yield_generation_graph_frames(graph, animated=True))
    assert 1 < len(frames)

    # Sketch (assumed parameters): save all frames as a looping animation
    params: dict[str, typing.Any] = dict(
        save_all=True,
        append_images=frames[1:],
        duration=1000,  # Assumed per-frame duration (ms)
        loop=0,  # Loop forever
    )
    if format == ImageFormat.WEBP:
        params.update(lossless=True)

    image_io = BytesIO()
    frames[0].save(image_io, format, **params)
    return image_io


def display_generation_graph(
    graph: nx.DiGraph,
    animated: bool = False,  # Signature partly reconstructed (assumed defaults)
    format: ImageFormat | None = None,
    save_image: bool = False,
) -> None:
    if format is None:
        # running_in_colab_env: notebook flag (not shown in this article)
        format = ImageFormat.WEBP if running_in_colab_env else ImageFormat.PNG
    if animated:
        image_io = draw_generation_graph_animation(graph, format)
    else:
        image_io = draw_generation_graph(graph, format)
    image_bytes = image_io.getvalue()
    IPython.display.display(IPython.display.Image(image_bytes))
    if save_image:
        stem = "graph_animated" if animated else "graph"
        Path(f"./{stem}.{format.value}").write_bytes(image_bytes)
```

We can now display our generation graph:

```python
display_generation_graph(asset_graph)
```

🚀 Challenge completed

We managed to generate a full set of new consistent images with Nano Banana and learned a few things along the way:

- Images prove again that they are worth a thousand words: It’s now a lot easier to generate new images from existing ones and simple instructions.

- We can create or edit images just in terms of composition (letting us all become artistic directors).

- We can use descriptive or imperative instructions.

- The model’s spatial understanding allows 3D manipulations.

- We can add text in our outputs (character sheet) and also refer to text in our inputs (front/back views).

- Consistency can be preserved at very different levels: character, scene, texture, lighting, camera angle/type…

- The generation process can still be iterative, but it feels 10x to 100x faster to reach better-than-hoped-for results.

- It’s now possible to breathe new life into our archives!

Possible next steps:

- The process we followed is essentially a generation pipeline. It can be industrialized for automation (e.g., changing a node regenerates its descendants) or for the generation of different variations in parallel (e.g., the same set of images could be generated for different aesthetics, audiences, or simulations).

- For the sake of simplicity and exploration, the prompts are intentionally simple. In a production environment, they could have a fixed structure with a systematic set of parameters.

- We described scenes as if in a photo studio. Virtually any other imaginable artistic style is possible (photorealistic, abstract, 2D…).

- Our assets could be made self-sufficient by saving prompts and ancestors in the image metadata (e.g., in PNG chunks), allowing for full local storage and retrieval (no database needed and no more lost prompts!). For details, see the “asset metadata” section in the notebook (link below).
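As a minimal sketch of “changing a node regenerates its descendants”, the function below lists the assets to regenerate after one asset changes, parents before children. It assumes a plain mapping from each asset ID to its `source_ids` (with every asset present as a key) and uses only the standard library; with networkx, `nx.descendants` combined with `nx.topological_sort` could serve the same purpose. `regeneration_order` is a hypothetical name.

```python
from collections import deque


def regeneration_order(sources: dict[str, list[str]], changed_id: str) -> list[str]:
    """Hypothetical helper: descendants of `changed_id`, each listed after its own sources."""
    children: dict[str, list[str]] = {node: [] for node in sources}
    for node, parents in sources.items():
        for parent in parents:
            children[parent].append(node)

    # Collect every descendant with a breadth-first traversal
    descendants: set[str] = set()
    queue = deque(children[changed_id])
    while queue:
        node = queue.popleft()
        if node not in descendants:
            descendants.add(node)
            queue.extend(children[node])

    # Kahn's algorithm restricted to the descendants: a node is ready
    # once all of its sources that also need regenerating are done
    pending = {n: sum(p in descendants for p in sources[n]) for n in descendants}
    ready = deque(sorted(n for n, count in pending.items() if count == 0))
    order: list[str] = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for child in children[node]:
            if child in pending:
                pending[child] -= 1
                if pending[child] == 0:
                    ready.append(child)
    return order


sources = {
    "1_robot": [],
    "2_mountains": ["1_robot"],
    "3_valley": ["1_robot", "2_mountains"],
    "4_forest": ["1_robot", "3_valley"],
    "6_ascension": ["1_robot", "2_mountains"],
}
print(regeneration_order(sources, "2_mountains"))  # ['3_valley', '6_ascension', '4_forest']
```

Each returned ID could then be regenerated with its stored `source_ids` and prompt.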
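Here is a minimal sketch of the metadata idea with Pillow's `PngInfo`, storing an asset's ID, source IDs, and prompt as a JSON payload in a PNG text chunk. The `"asset"` keyword, the JSON layout, and the function names are assumptions for illustration and may differ from the notebook's “asset metadata” section.

```python
import json
from io import BytesIO

import PIL.Image
from PIL.PngImagePlugin import PngInfo

METADATA_KEY = "asset"  # Hypothetical text-chunk keyword


def to_png_with_metadata(image: PIL.Image.Image, asset_id: str, source_ids: list[str], prompt: str) -> bytes:
    """Encode the image as PNG with its generation metadata embedded in a text chunk."""
    payload = dict(id=asset_id, source_ids=source_ids, prompt=prompt)
    info = PngInfo()
    info.add_text(METADATA_KEY, json.dumps(payload))  # JSON keeps the payload ASCII-only
    buffer = BytesIO()
    image.save(buffer, "PNG", pnginfo=info)
    return buffer.getvalue()


def read_png_metadata(png_bytes: bytes) -> dict:
    """Read back the metadata stored by to_png_with_metadata."""
    with PIL.Image.open(BytesIO(png_bytes)) as image:
        return json.loads(image.text[METADATA_KEY])
```

Writing these bytes to disk (e.g., `Path("3_valley.png").write_bytes(...)`) would keep each asset's lineage with the file itself, so the graph could be rebuilt by reading the `source_ids` of every PNG in a folder.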

As a bonus, let’s end with an animated version of our journey, with the generation graph also showing a glimpse of our instructions:

```python
display_generation_graph(asset_graph, animated=True)
```

➕ More!

Want to go deeper?

Thanks for reading. I look forward to seeing what you create!
