Researchers from Meta and ETH Zurich have developed a software system called TouchInsight, which they say solves the problem of turning any surface into a virtual keyboard.

Text input in VR and AR today is cumbersome, and significantly slower than on PCs and smartphones. Floating virtual keyboards require awkwardly holding your hands up and slowly pressing one key at a time, providing no haptic feedback and preventing you from resting your wrists. Quest headsets support pairing a Bluetooth keyboard for full-speed typing, but this means you have to carry around a separate device larger than the headset itself.

If headsets could turn any flat surface into a virtual keyboard, it would bring partial haptic feedback and allow you to rest your wrists, without the need to carry around a physical keyboard. Developers can technically already build surface-locked virtual keyboards on Quest today, by using hand tracking and getting the user to tap the surface to calibrate its position. But in practice, even the slightest deviation of the virtual surface height from the real surface results in an unacceptable error rate.

Meta showed off research towards solving this problem last year, with its CTO Andrew Bosworth saying he achieved almost 120 words per minute (WPM). But last year’s solution seemed to require a series of fiducial markers on the table, which may have been acting as a robust dynamic calibration system. Plus, Meta didn’t reveal the error rate of that system.

In the TouchInsight paper, the six researchers say their new solution only achieves a maximum of just over 70 WPM, and an average of 37 WPM. The average person types at around 40 WPM on a traditional keyboard, while professional typists reach between 70 and 120 WPM depending on their skill level.

But crucially, TouchInsight apparently works with only a standard Quest 3 headset, on any table, with no markers or external hardware of any kind. And while Meta didn’t disclose the error rate for last year’s system, the researchers say the average uncorrected error rate of TouchInsight is just 2.9%.

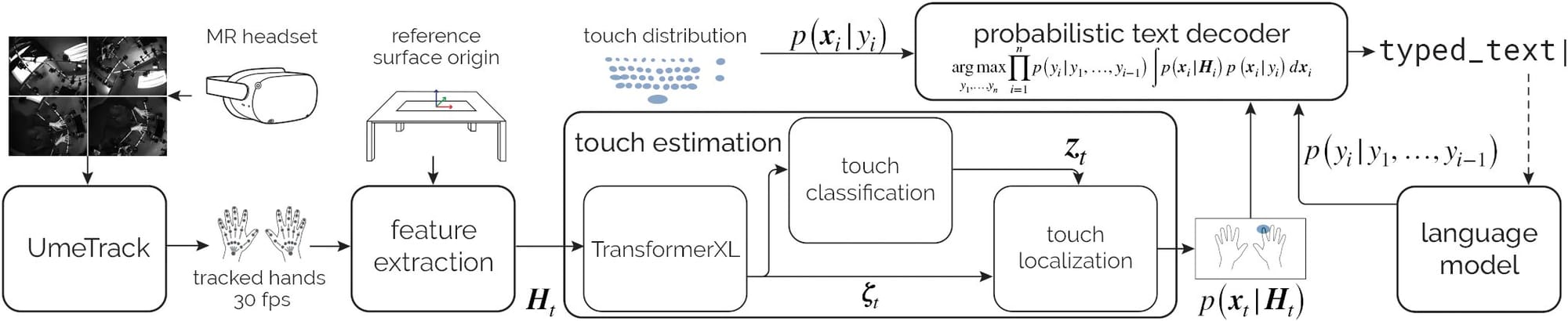

“In this paper, we present a real-time pipeline that detects touch input from all ten fingers on any physical surface, purely based on egocentric hand tracking.

Our method TouchInsight comprises a neural network to predict the moment of a touch event, the finger making contact, and the touch location. TouchInsight represents locations through a bivariate Gaussian distribution to account for uncertainties due to sensing inaccuracies, which we resolve through contextual priors to accurately infer intended user input.”
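
To give a rough sense of how a Gaussian touch estimate and a contextual prior might combine, here is a minimal Python sketch. It is not the researchers’ implementation: the key layout, the covariance values, the prior probabilities, and the function names `gaussian_log_density` and `decode_key` are all assumptions made up for illustration. It simply applies Bayes’ rule, scoring each key by the Gaussian density at its center multiplied by a prior probability for that key.

```python
import math

def gaussian_log_density(point, mean, cov):
    """Log density of a bivariate Gaussian evaluated at `point`."""
    (sxx, sxy), (_, syy) = cov
    det = sxx * syy - sxy * sxy
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    # Squared Mahalanobis distance via the closed-form 2x2 inverse.
    maha = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return -0.5 * maha - math.log(2 * math.pi) - 0.5 * math.log(det)

def decode_key(touch_mean, touch_cov, key_centers, prior):
    """Posterior over keys: Gaussian touch likelihood times a prior."""
    log_post = {
        key: gaussian_log_density(center, touch_mean, touch_cov)
        + math.log(prior[key])
        for key, center in key_centers.items()
    }
    # Normalise with log-sum-exp for numerical stability.
    peak = max(log_post.values())
    total = sum(math.exp(v - peak) for v in log_post.values())
    return {key: math.exp(v - peak) / total for key, v in log_post.items()}

# Hypothetical layout: three adjacent keys, centers 19 mm apart.
KEY_CENTERS = {"q": (0.0, 0.0), "w": (19.0, 0.0), "e": (38.0, 0.0)}

# Hypothetical touch estimate: between "q" and "w", slightly nearer "w",
# with more uncertainty along x (variance 36) than along y (variance 9).
TOUCH_MEAN = (10.5, 0.0)
TOUCH_COV = ((36.0, 0.0), (0.0, 9.0))

# Hypothetical contextual prior, e.g. from a language model that
# expects "q" to come next.
CONTEXT_PRIOR = {"q": 0.7, "w": 0.2, "e": 0.1}

if __name__ == "__main__":
    uniform = {key: 1 / len(KEY_CENTERS) for key in KEY_CENTERS}
    print("uniform prior:", decode_key(TOUCH_MEAN, TOUCH_COV, KEY_CENTERS, uniform))
    print("context prior:", decode_key(TOUCH_MEAN, TOUCH_COV, KEY_CENTERS, CONTEXT_PRIOR))
```

With a uniform prior, the nearest key (“w”) gets the highest posterior. With the made-up contextual prior, the decision flips to “q”, showing how context can resolve an ambiguous touch location.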

TouchInsight could also be used for general detection of finger presses on physical surfaces beyond just typing. For example, the researchers say, it could enable a mixed reality Whac-A-Mole game where you use your fingers to squish tiny moles.

The researchers say they will demo TouchInsight at the ACM Symposium on User Interface Software and Technology next week, in Pittsburgh.

It’s unclear whether Meta plans to integrate TouchInsight into Horizon OS any time soon, and if not, what the specific barriers to doing so are.
