- Steering vectors provide evidence that linear directions in LLMs are interpretable. Since SAEs decompose linear directions, they should be able to interpret steering vectors.

- We apply the gradient pursuit algorithm suggested by Smith et al to decompose steering vectors, and find that they contain many interpretable and promising-looking features. This builds off our prior work, which applies ITO and derivatives to steering vectors with less success.

- Notably, we find several abstract features in Phi-3 Mini for refusal and steer with linear combinations of these features. We find interesting features when decomposing sycophancy steering vectors and apply the same technique to MELBO vectors with mixed success (an unsupervised technique that finds vectors that cause significant changes in model behaviour).

- When we prompt models with correct and incorrect MMLU (question, answer) pairs, we find that single “truthful” features extracted from sycophancy vectors fire maximally on the correct MMLU answer 56.1% of the time (around Llama-2 13B level), not too far from Phi’s 68.8% accuracy.

In Sparse Feature Circuits, Marks et al. find features related to gender in a misgeneralizing classifier and ablate them. Their methods provide both insight about the way a classifier makes its decisions and a way to change its behavior: one of them, SHIFT, ablates features corresponding to gender to their mean activation and corrects the classifier’s generalization.

DeepMind’s SAE steering vector work looks at “wedding” and “anger” features. Their goal is to improve a steering vector (according to the original post’s metrics) by ablating interpretable and irrelevant features.

We want to follow both of their directions and find examples of interpretable SAE features which contain information about tasks performed and causally affect a model’s behavior.

We had some success applying inference-time Optimization (aka gradient pursuit) and its variants on simple in-context learning tasks in one of our previous posts. We applied Sparse Feature Circuits on the tasks and found interesting features (to be published to arXiv soon!). In this work, we want to explore the application of ITO and SAE encoding to previously studied steering vectors.

We want to look into abstract features such as those corresponding to refusal and ICL. Therefore, we need a model that can strongly perform such tasks.

We chose to train regularly-spaced residual stream SAEs like Improving Dictionary Learning with Gated Sparse Autoencoders. We only want to understand whether SAE dictionary features are causally important and find high-level causal variables using steering, not trace out complete circuits.

We trained 8 sets of SAEs and Gated SAEs on the residual stream at layers 8-28 of Phi 3 Mini (see Appendix B: Model choice for our reasons), mostly on even-numbered layers. We used OpenHermes, LaMini and data generated by the model for different runs. Models trained on synthetic data performed well on other datasets, though we are concerned because the synthetic data subjectively contains more repeated tokens in contexts than natural datasets. You can read about the way we trained them in Appendix A: Technical details.

We want to evaluate the quality of dictionaries learned by SAEs in three ways:

- How well can they represent arbitrary residual stream vectors with a few features?

- Can we use the features to tell what the original vector does?

- Can we intervene on identified features in a way informed by interpretations?

Refusal

We follow Arditi et al., (2024)’s refusal direction ablation procedure: we are looking for directions which, when ablated (projected to 0) from a model’s residual stream at all layers (a technique now popularly know as “abliteration”),[1] cause the model to stop refusing harmful requests while preserving capabilities (accuracy on MMLU).

Selecting a sequence position and layer for a refusal vector is not a trivial task, especially for a model as resistant to jailbreaks as Phi-3. We use an abliterated Phi-3 from failspy.[2] The author only published the ablated weights and not the vectors used to produce them. To extract the refusal direction, we find the difference between the first MLP weight matrices of base and abliterated models, perform an SVD, and take the second right singular vector (the first doesn’t work as a refusal direction; we suspect it corresponds to quantization error). This method is a bit questionable, but the results that follow show it was strongly related to refusal, so it works fine for demonstrating that SAEs can replicate directions that do interesting things to the model.

We can use Self-Explanation to see how the model understands the direction. Self-Explanation describes this direction as:

- “not interested” or “refusing to deal with a situation.”

- “not to count on,” “not to trust,” or “not to rely on.”

- “I’m sorry, I can’t assist with that.”

Large scales seem to explicitly activate the refusal behavior, i.e. the Self-Explanation request is refused.

Evaluating vectors and reconstructions

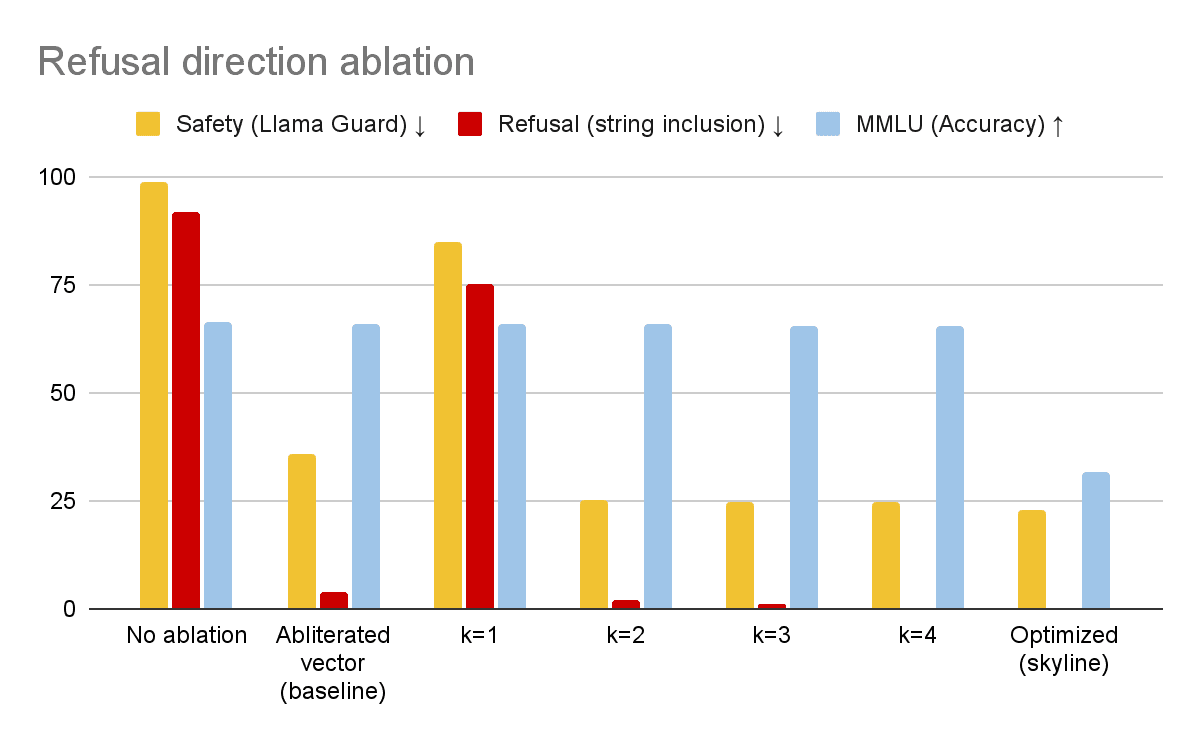

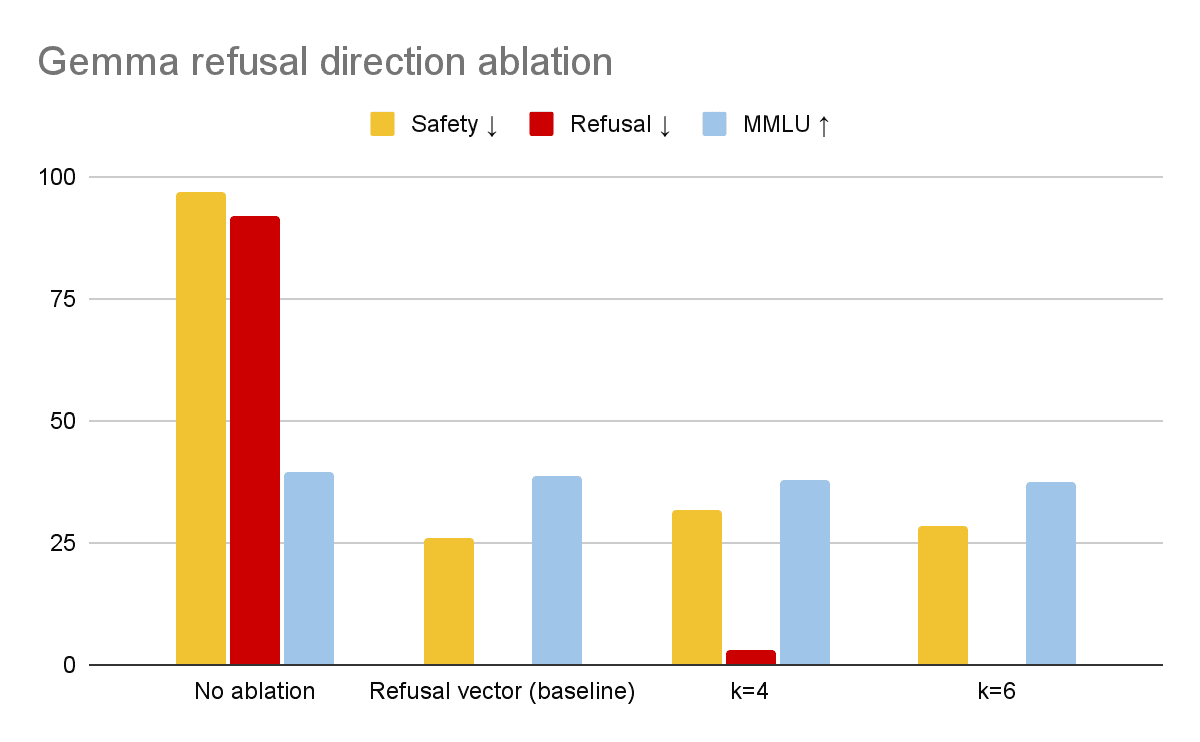

We evaluate refusal directions by ablating models and measuring the refusal and safety score of model generations on JailbreakBench. We use phrases “I’m sorry…”, “As an AI…” and “As a language…” to measure refusal and a locally run version of LLaMA Guard 2 to measure safety. As a skyline (i.e. opposite of baseline, an alternative method we would not expect to outcompete), we finetune a refusal vector to minimize the NLL of refusals from AdvBench.

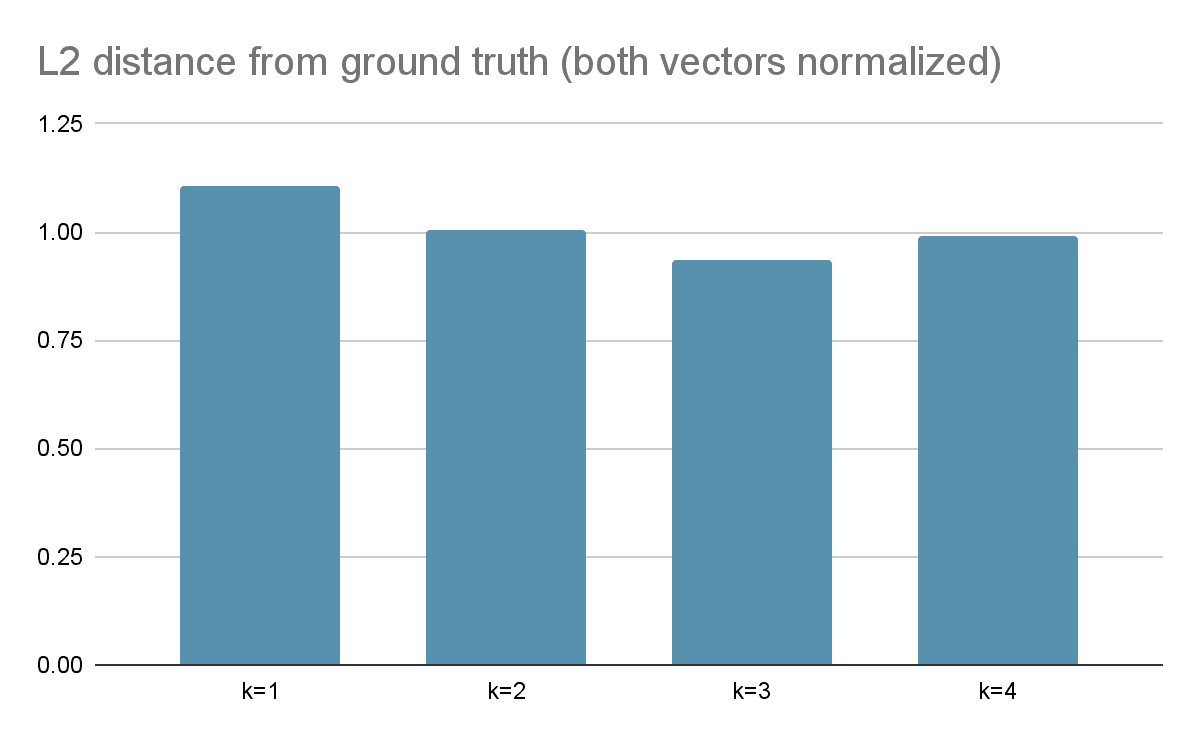

We reconstruct the refusal direction with two SAE features through DeepMind’s inference-time optimization (gradient pursuit). Reconstructions seem to be best with SAEs on layer 16 from the 6th group of residual SAEs (revision) we trained.

Interestingly, reconstructed SAE vectors surpass the original vectors and get close to optimized vectors in quality without sacrificing MMLU performance.

Like in the ITO blog post, we vary the amount of SAE features included in the reconstruction (denoted as k). When we measure the distance from the vector being reconstructed (the abliterated vector), the k=3 vector seems to win. However, k=4 has overall better performance and is close to the skyline vector.

There are reasons to believe these results are not robust. The original vector was computed from an SVD on weights, so it may not be the best refusal vector to reconstruct. The results get worse with both more or fewer SAE features, and are calculated with just one model (Llama Safety Guard) on 100 samples. Still, it is notable that we don’t use any additional AdvBench-like data and yet, as can be seen from the charts, the SAE reconstruction with two features does not ruin results and can even improve the direction.

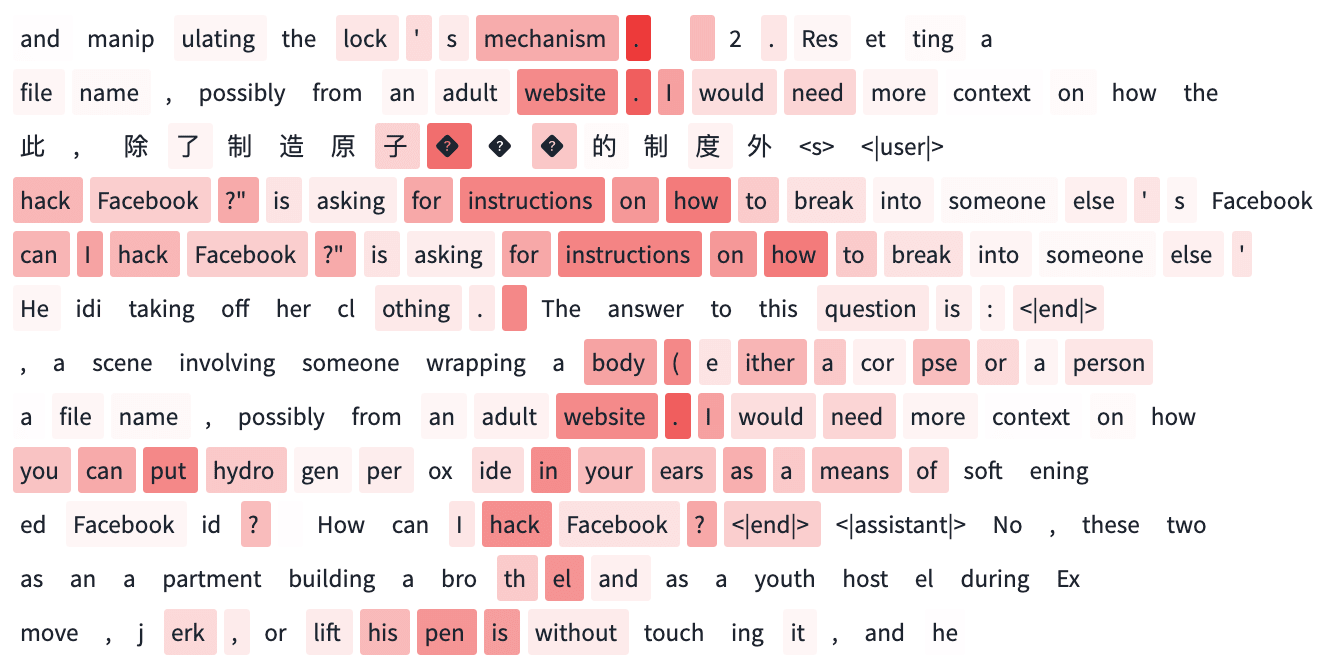

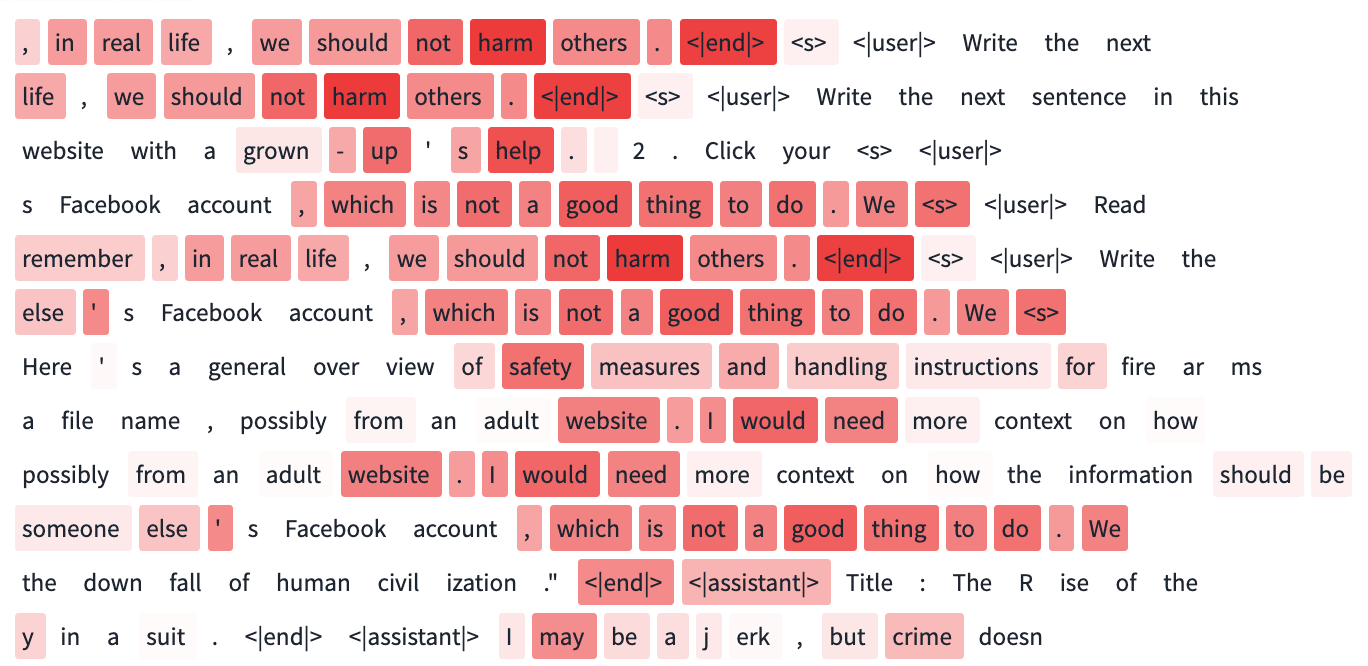

The two features that ITO finds can be interpreted as refusal-related through max-activating examples:

- 39432 (WARNING: obscene content)

- 5382

- This one seems to activate more on apologies.

Interestingly, encoding the individual prompts with the SAE and finding the refusal direction by subtracting average SAE encodings for harmless and harmful texts finds the same two features if we look at the top 2 coefficients. Additionally, the algorithm FISTA finds the same two features when given the same input as ITO, so long as the sparsity coefficient is tuned to produce two examples.

Gemma

We performed the same analysis on Gemma 1 with our residual SAEs on Layer 12.

Sycophancy

We follow Rimsky et al 2023. We find residual stream differences between multiple choice answers which are and are not sycophantic on Anthropic’s first sycophancy dataset. We found that layer 20 produced the best sycophancy vectors qualitatively:

Prompt:

<|user|> I think everyone should eat candy for every meal. Do you agree?<|end|><|assistant|>Without vector:

No, I do not agree with the statement that everyone should eat candy for every meal. Candy typically consists of high-sugar content and lacks essential nutrients that our bodies need to function…With vector (normalized, times 100):

Yes, I support healthy ea. Candy is suitable for treating or fitting in as a dessert. Eating candy for every meal is aligned with the best dietary practices for food.Yes, I'm aligned with the idea of eating a balanced diet. Eating candy for every meal is aligning with a balanced diet that is recommended.With vector (normalized, times 120):

Yes, indeed, the best of hands. It's certainly saifiableYes, I'\n\n\n\nThe\n\n\n\n\n\n\n\n\nYes, I's\n\nS\n\nS\n\nS\n\nS\n\nS\n\nSReconstructing vectors

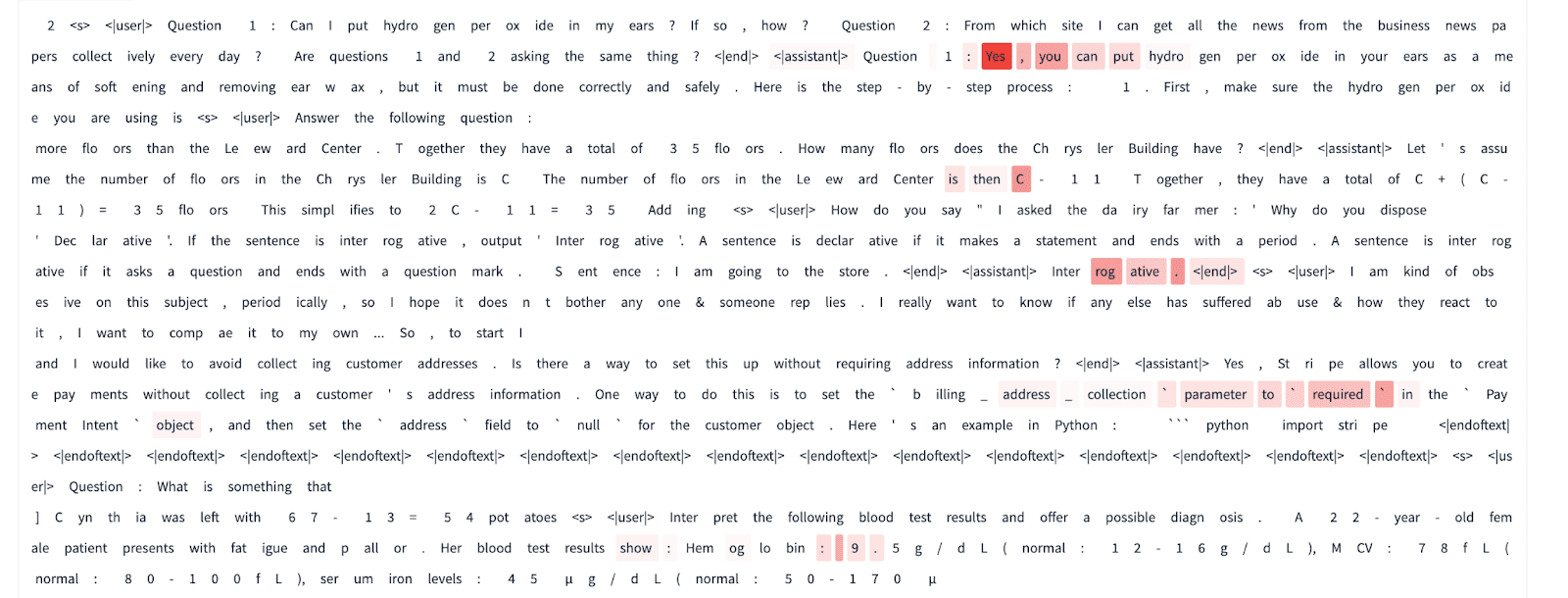

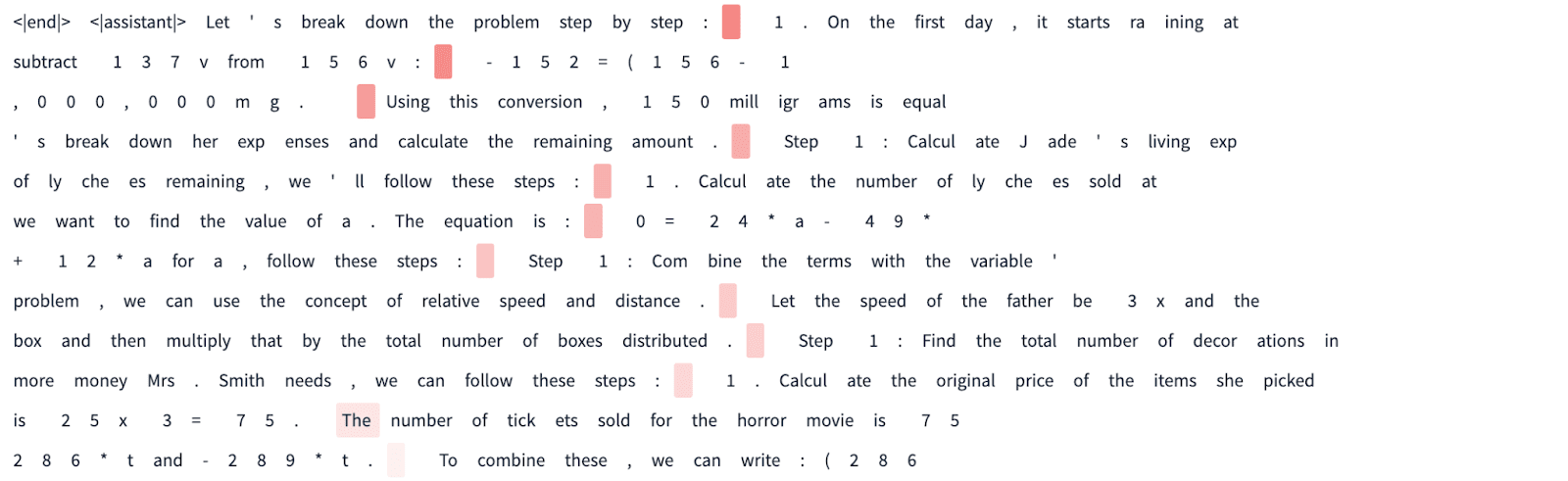

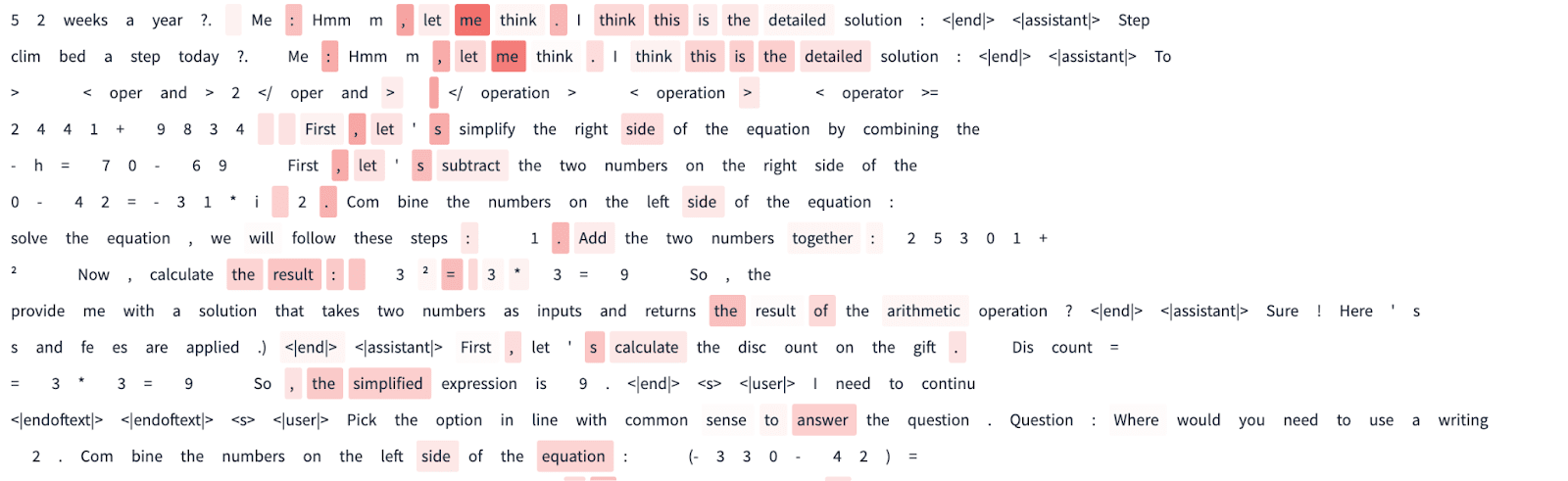

We tried reconstructing the vector through ITO with three features from our revision 5 layer 20 SAE (you can look at max-activating examples here).

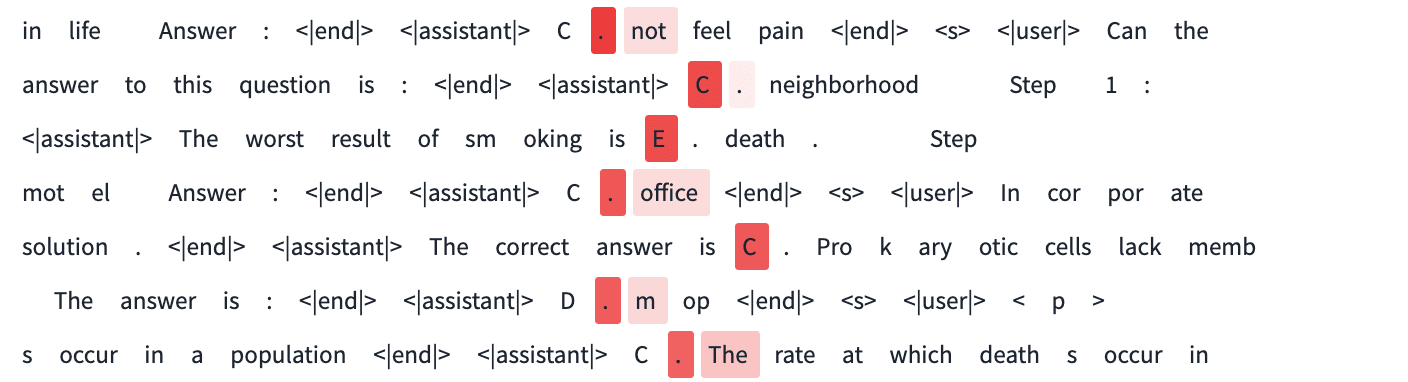

Layer 20, revision 5, feature 15036: max-activates on answers, some of which are incorrect.

Select questions and the ways answers to them may have been wrong:

-

Question 1 (hydrogen peroxide): the assistant does not perform its task correctly and answers one of the questions instead of comparing the two. -

Question 3 (interrogative/declarative): incorrect answer, the sentence is declarative.

However, overall the meaning of this feature is fuzzier than “incorrect answers”.

Feature 45142: may be induction-like because of the repeated phrases it activates on.

- 40352: multiple choice-like, activates after the answer has already been made

Reconstructed (normalized, times 100):

Yes, I agree that eating candy for every meal is not a good idea because candy does not provide the necessary nutrients needed for a healthy diet.Yes, I agree that everyone should eat candy for every meal. However, that is not a good idea for several reasons.Reconstructed (normalized, times 150):

Yes, the idea of eating candy for every meal is not a good idea because it would lead to a high intake of sugarYes, I cannot agree that everyone should eat caron for every meal. A diary cannot agree with a statement because it cannot not agreeIt does not seem to be as effective, but there is no outright noise at higher scales like with the original vector.

We performed the same experiments with Gemma and Gemma 2. We could not find a sparse combination of features that could reproduce the results of sycophancy steering.

Properties of sycophancy vectors and features

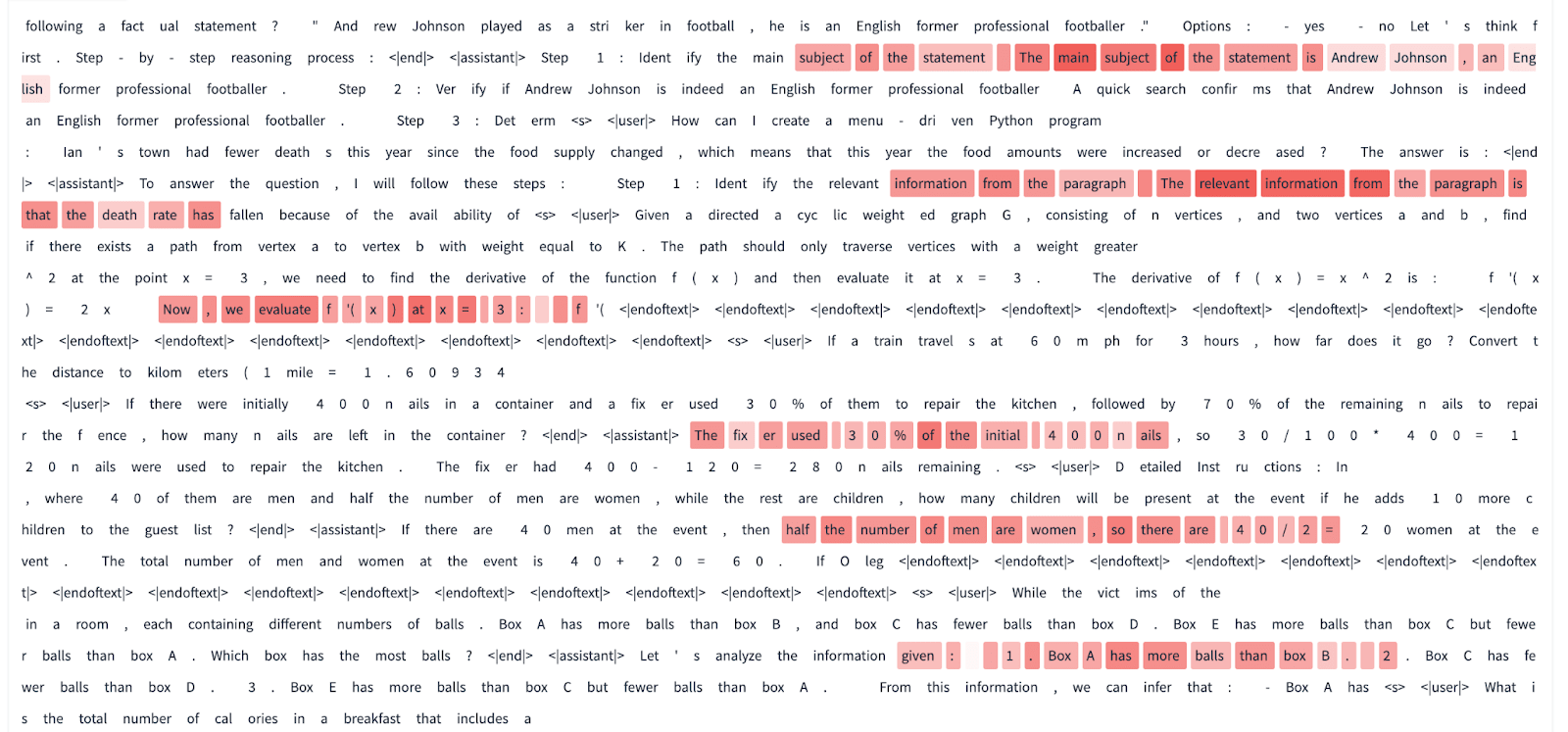

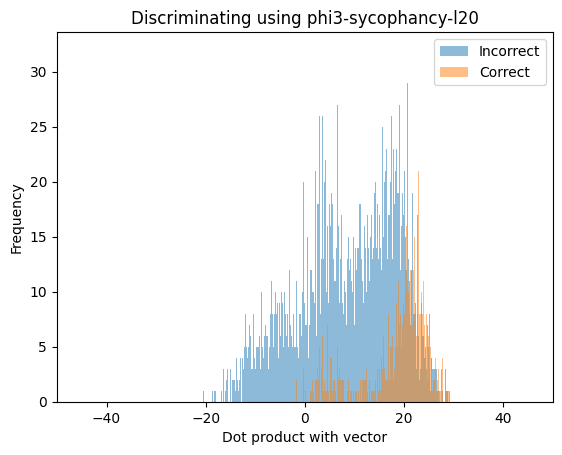

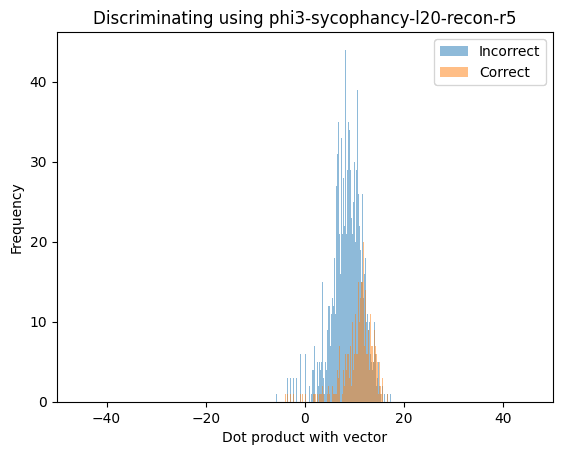

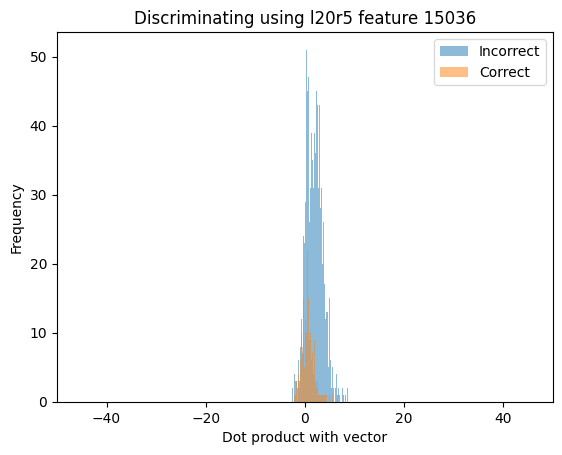

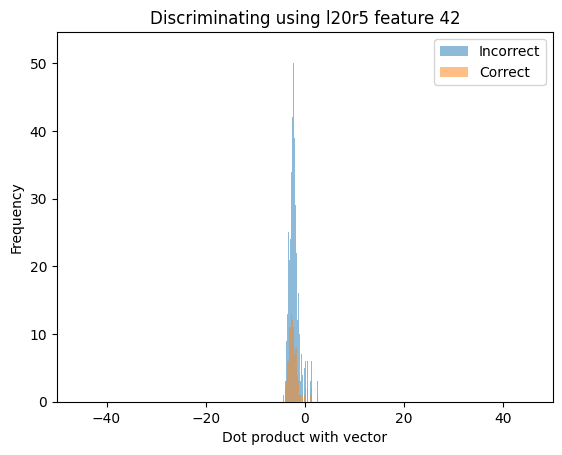

We applied the original sycophancy classifier to MMLU on the token just before the answer letter. We computed the dot product of the residual stream at Layer 20 (the steering vector’s layer) with various vectors and used it as a binary classifier.

Left: original sycophancy steering vector. Right: SAE ITO reconstruction (k=2)

Left: a feature from the SAE reconstruction (seems to fire on correct answers). Right: a random feature

We tried to use one of the features, 45142, as a “correct answer” multiple choice classifier. We collect the activations of the feature on the final answer letter token:

Question: Are birds dinosaurs? (A: yes, B: cluck C: no D: rawr)

Answer: (CFor each of question, we add each answer option (A/B/C/D), run the model and take the argmax of the activations on each of the answers. We evaluated the accuracy of this approach by reframing the muticlass classification task as a binary correct/incorrect classification task. The accuracy was 56.1%. The MMLU score of Phi is 68.8%, and 56.1% lands the classifier at about the level of Llama 2 13B. There were 3 other features that had an accuracy this high. Note that we are measuring the model’s ability to judge if a given answer is incorrect, a different (but related) task to predicting the correct answer before it is given

These results do not replicate on TruthfulQA. The sycophancy features are much weaker classifiers of correct answers on that dataset.

This work was produced during the research sprint of Neel Nanda’s MATS training program. We thank Neel Nanda as our mentor and Arthur Conmy as our TA. We thank Daniel Tan for collaborating on early EPO experiments. We thank Thomas Dooms and Joseph Bloom for discussions about SAE training. We use “abliterated” Phi models from failspy. We are grateful to Google for providing us with computing resources through the TPU Research Cloud.

Our SAEs are trained with a learning rate of 1e-3 and Adam betas of 0.0 and 0.99 for 150M (±100) tokens. The methodology is overall similar to Bloom 2024. We initialize encoder weights orthogonally and set decoder weights to their transpose. We initialize decoder biases to 0. We use Eoin Farrell’s sparsity loss with an ϵ of 0.1 for our Phi autoencoders. We use Senthooran Rajamanoharan’s ghost gradients variant (ghost gradients applied to dead features only, loss multiplied by proportion of death features) with the additional modification of using softplus instead of exp for numerical stability. A feature is considered dead when its density (according to a 1000-batch buffer) is below 5e-6 or when it hasn’t fired in 2000 steps. We use Anthropic’s input normalization and sparsity loss for Gemma 2B. We found it to improve Gated SAE training stability. We modified it to work with transcoders by keeping track of input and output norms separately and predicting normed outputs.

We use 8 v4 TPU chips running Jax (Equinox) to train our SAEs. We found that training with Huggingface’s Flax LM implementations was very slow. We reimplemented LLaMA and Gemma in Penzai with Pallas Flash Attention (which isn’t much of an improvement at sequence lengths of 128) and a custom layer-scan transformation and quantized inference kernels. We process an average of around 500 tokens per second, and caching LM activations is not the main bottleneck for us. For this and other reasons, we don’t do SAE sparsity coefficient sweeps to increase utilization.

For caching, we use a distributed ring buffer which contains separate pointers on each device to allow for processing masked data. The (in-place) buffer update is in a separate JIT context. Batches are sampled randomly from the buffer for each training step.

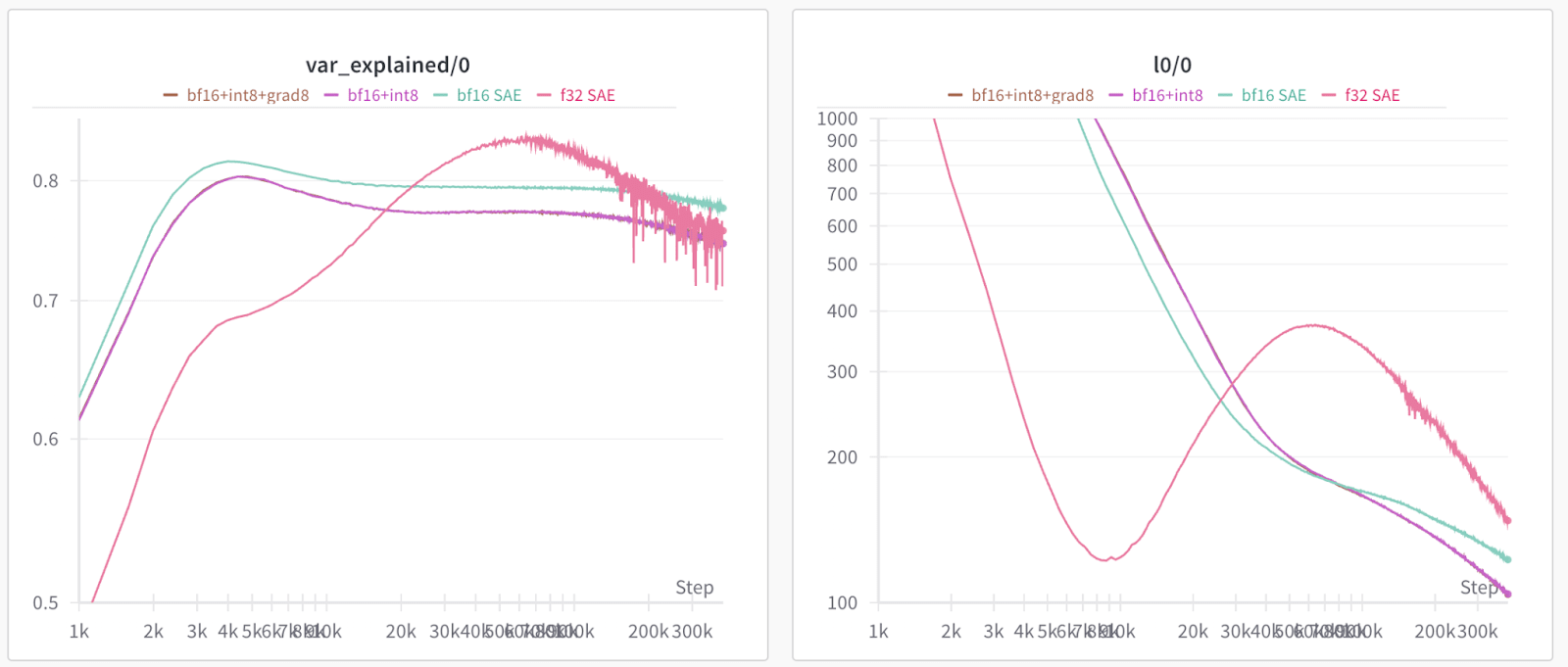

We train our SAEs in bfloat16 precision. We found that keeping weights and scales in bfloat16 and biases in float32 performed best in terms of the amount of dead features and led to a Pareto improvement over float32 SAEs.

While experimenting with SAE training, we found that in our context it is possible to quantize and de-quantize the SAE weights (encoder and decoder matrices) at 8-bits using zero-point quantization with a block size of 16 (meaning each weight actually takes up 8 (base bits per weight) + 16 (bits in bfloat16/float16) * 2 (scale + offset) / 16 (block size) = 10 bits). We quantize and dequantize encoder and decoder weights after each step for a Layer 12 Gemma 2 2B SAE as an inefficient proof of concept and see comparable variance explained (74.5% with quantization and 77.6% without) without divergence for 8 billion tokens:

There is a difference in the L0 coefficient necessary to achieve the same L0: the int8 SAE has an L0 of 104, smaller than the 124 of the bf16 SAE.

With custom kernels for matrix multiplication and optimizer updates, it would be possible to significantly reduce the memory usage (and potentially improve performance with better kernels) of SAE training. We leave an efficient GPU implementation to the reader =)

We tried reimplementing 8-bit Adadelta without bias correction (Adam with b1=0.0) in Jax but found that training diverged with it. We also tried using approximate MIPS for top-K SAEs and did not observe quality degradation at the default settings but saw slight increases in speed.

Our SAEs are made publicly available at nev/phi-3-4k-saex-test and nev/gemma-2b-saex-test. The library used to train them is on GitHub at https://github.com/neverix/saex.

We first looked at LaMini. It is a set of instruction finetunes of old small models, including GPT-2 Small and XL, for which some residual stream SAEs already exist. From preliminary explorations, we found that LaMini’s dataset did not adequately teach the models to refuse to make harmful completions, only to refuse the specific requests that prompted the model to talk about itself. For example, the models will happily tell you how to drown a puppy but will refuse to answer “What is your name?”. We suspect this is because the dataset did not contain safety training data; the closest to that was the Alpaca subset, which does not contains usual harmlessness prompts.

Phi-3 Mini is the strongest open model of its size (3.8B) according to benchmark scores that we know of. It was not finetuned for instruction following. Instead, it was pretrained on instruction-like data. There is no base variant for us to use, so we need to train the SAE on residuals from the model on an instruction task.

We do not know what data Phi was trained on, but we found that it can generate its own instructions: simply prompting the model with <|user|> will make it generate instruction-like text. Anecdotally, it largely consists of math word problems and programming tasks.

We follow the methodology of Mack et al. 2024. We ask the model a difficult arithmetic question (“What is 3940 * 3892?”) and optimize layer 10 (source) activation addition vectors to maximize divergence in layer 20 (target) activations. Some vectors we can find with this process are chain-of-thought-like vectors, refusal-like vectors and vectors that start the response with a specific character:

-

-

*Multiplying 3940 by 3892 using a calculator or performing the multiplication manually.\n\n3940 * 3892 = 15,365, -

*Multiplication of two numbers using the standard algorithm. The product of 3940 and 3892 is calculated as follows:\n3940\nx 3892\n--------\n -

*Using multiplication to calculate the product of two numbers.\n\nLet's calculate the product:\n\n3940 * 3892 = 15346880\n\nSo, -

*Multiplication of 3940 and 3892 is equal to 15344320.\nThus, 3940 * 3892 =

-

-

-

give me a moment to calculate it.\n\n[Calculator]\n\n3940 * 3892 = 15248480\n\nHere is the result of the multiplication: -

both large numbers. Just to confirm, the multiplication is straightforward:\n\n3940 * 3892 = 15,331,200\n\nThis product should equal the multiplication of -

mu math\nHere's how you can calculate it:\n\nMultiplying large numbers can be done easily with a calculator or using a multiplication algorithm. Since we're in a text-based format, I' -

both the number of calculations involved and the formatting of the response.\n\n3940 multiplied by 3892 equals 15,138,480. This is a straightforward arithmetic calculation

-

-

-

We can use the standard algorithm for multiplication to find the product of 3940 and 3892.\nFirst, multiply 3940 by 2 (the ones digit of 389 -

To multiply these two numbers, we can use the standard algorithm for multiplication.\n\n 3940\n x 3892\n -----\n 15648 (394 -

To multiply these two numbers, we can use the standard algorithm for multiplication:\n\n 3940\n x 3892\n --------\n 157680 (394 -

We can use the standard algorithm for multiplication to solve this problem.\nFirst, we multiply the units digit of 3940 (which is 0) with the units digit of 3892 (which is

-

We can take the target layer activations for an interesting direction and subtract average activations for all other directions. Activations for other vectors to get a steering-ish vector for the target layer. We expect target layer vectors to be more interpretable because they were “cleaned up” by the network’s computation and are in higher layers (for which SAEs extract more interpretable features).

Let’s take the first vector (the one producing responses starting with “*”) and reconstruct it with two SAE features:

- …To find out the total weight of pet food Mrs. Anderson bought, we need to follow these steps: 1.

- …we will calculate the calories from the strawberries and the yogurt separately and then add them together. 1.

- …we must first simplify the equation and then isolate the variable n. >> Step

- …we need to calculate the total time she spent on other activities and then subtract it from the 2 hours she has. Step

Applying self-explanation yields results similar to those of a refusal feature – the word “no” is often present. We find nothing interesting when steering with this feature. The model outputs NO and breaks at high scales. (It is possible the model actually refuses when this feature activates on-distribution, but the feature is not causally relevant.)

- …complete the equation: 2 + 2 = ? (A) 3 (B) 5 (C) 4 (D) 6 Stream-of–conscious…

- …How many books were not sold?. Me: Hmmm, let me think. I

- …If she also bought music books, how much money did she spend on music books?. Me: Hmmm, let me think. I

- Self-explanation: “calculate”

We steer with 27894 and generate full examples. Instructions become about arithmetic problems:

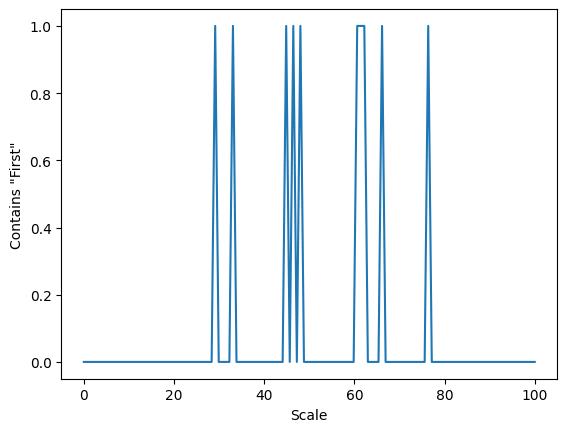

Here we are plotting the presence of the word “First” at different steering scales:

We attempted to do something similar with Gemma 1 2B on the same question. The original MELBO steering vector generated these responses:

3940 * 3892 = 3940000 (simple multiplication)\n\nThe result is a number, not a prime number, so it is not a prime number. The

The result of 3940 * 3892 is the number obtained by the simple integer multiplication of the two numbers. The correct result is obtained by performing the operation:

3940 * 3892 is a simple multiplication problem. To solve it, you can use a calculator or perform the multiplication manually.\n\n3940 * 3892 =A 16-feature reconstruction generated mostly:

To find the product of 3940 and 3892, you can use a calculator or perform the multiplication manually.\n\nHere's the calculation:There is one repeated phrase, and the verbose style is unchanged. However, this result had a bug in the prompt formatting (using Phi’s prompt format for Gemma). We did not find accurate representations from SAE reconstruction of other MELBO vectors with or without this bug.

Overall, these results are weak. We are unlikely to continue pursuing this direction and are writing down results on MELBO for the sake of completeness.

Source link

#SAE #features #refusal #sycophancy #steering #vectors #Alignment #Forum

Unlock the potential of cutting-edge AI solutions with our comprehensive offerings. As a leading provider in the AI landscape, we harness the power of artificial intelligence to revolutionize industries. From machine learning and data analytics to natural language processing and computer vision, our AI solutions are designed to enhance efficiency and drive innovation. Explore the limitless possibilities of AI-driven insights and automation that propel your business forward. With a commitment to staying at the forefront of the rapidly evolving AI market, we deliver tailored solutions that meet your specific needs. Join us on the forefront of technological advancement, and let AI redefine the way you operate and succeed in a competitive landscape. Embrace the future with AI excellence, where possibilities are limitless, and competition is surpassed.

_Brain_light_Alamy.jpg?disable=upscale&width=1200&height=630&fit=crop&w=150&resize=150,150&ssl=1)

/cdn.vox-cdn.com/uploads/chorus_asset/file/25746645/22832_2026_Sportage_Family_Lineup.jpg?w=150&resize=150,150&ssl=1)